Self-Supervised Model Adaptation for Multimodal Semantic Segmentation

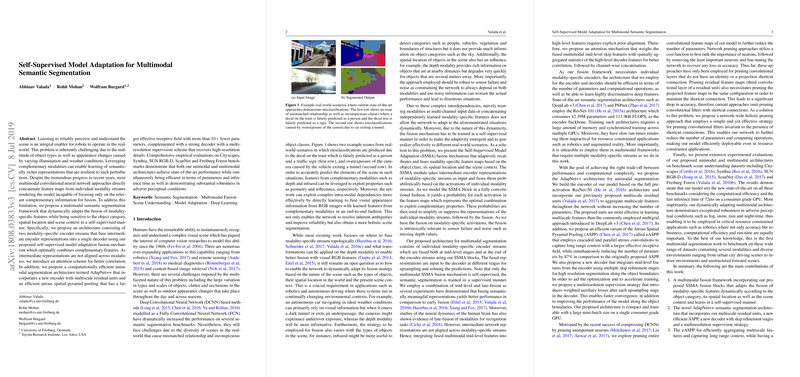

This paper presents a multimodal semantic segmentation framework aimed at robotic perception in real-world environments. Its central idea is a self-supervised fusion scheme that dynamically adapts how modality-specific features are combined according to object category, spatial location, and scene context, with the goal of improving segmentation across diverse environments.

The authors identify a gap in conventional multimodal CNN approaches, most of which simply concatenate feature maps and cannot shift emphasis toward the more informative modality. To address this, they propose a modular fusion framework built around the Self-Supervised Model Adaptation (SSMA) block, which recalibrates the feature maps from each modality according to the input context and then fuses them. The recalibration is learned without explicit supervision of the fusion itself, so the fusion strategy can vary from input to input rather than being fixed in advance.
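One plausible reading of "recalibrate, then fuse" is a gating scheme: concatenate the two modality features, pass them through a small bottleneck to produce per-channel weights between 0 and 1, and scale the concatenated features by those weights. The sketch below illustrates that idea at a single pixel using plain Python. It is a simplified illustration, not the authors' implementation: the function names, channel count, reduction ratio, and random weights are all hypothetical, per-pixel linear maps stand in for convolutions, and any final projection back to the original channel count is omitted.

```python
import math
import random


def matvec(weights, vec):
    """Multiply a (rows x cols) weight matrix by a length-cols vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def ssma_gate_pixel(feat_a, feat_b, w_squeeze, w_excite):
    """Gate the concatenated features of two modalities at one pixel.

    Steps: concatenate -> bottleneck + ReLU -> expand + sigmoid -> scale.
    Returns the recalibrated features and the gate values.
    """
    concat = feat_a + feat_b  # list concatenation: 2C channels
    hidden = [max(0.0, h) for h in matvec(w_squeeze, concat)]  # ReLU
    gates = [1.0 / (1.0 + math.exp(-g)) for g in matvec(w_excite, hidden)]
    recalibrated = [g * x for g, x in zip(gates, concat)]
    return recalibrated, gates


def random_matrix(rows, cols, rng):
    """Stand-in for learned weights (hypothetical; drawn uniformly at random)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
channels, reduction = 4, 2  # hypothetical sizes, chosen for illustration
bottleneck = 2 * channels // reduction

w_squeeze = random_matrix(bottleneck, 2 * channels, rng)
w_excite = random_matrix(2 * channels, bottleneck, rng)

rgb_feat = [rng.uniform(0.0, 1.0) for _ in range(channels)]
depth_feat = [rng.uniform(0.0, 1.0) for _ in range(channels)]

fused, gates = ssma_gate_pixel(rgb_feat, depth_feat, w_squeeze, w_excite)
print("gates:", [round(g, 3) for g in gates])
print("fused:", [round(x, 3) for x in fused])
```

Because the gates are computed from the input itself, the weighting of each modality changes from pixel to pixel. The gate weights would be trained only through the segmentation loss, which is the sense in which no separate fusion labels are needed.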

The framework builds on AdapNet++, the efficient unimodal segmentation architecture that the authors introduce alongside it. To keep the model compact, the encoder uses multiscale residual units together with an efficient atrous spatial pyramid pooling module. These changes reduce the parameter count while enlarging the effective receptive field, which matters for capturing scene context in segmentation tasks.
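The reason atrous (dilated) convolutions enlarge the receptive field without adding parameters can be shown with a little arithmetic. A kernel of size k with dilation d spans d(k - 1) + 1 pixels along one axis but still has only k weights along that axis, and stacking stride-1 layers adds their spans together. The sketch below computes both quantities; the dilation rates used are hypothetical examples, not the values used in the paper.

```python
def atrous_kernel_extent(kernel_size, dilation):
    """Span in pixels covered by one dilated kernel along one axis."""
    return dilation * (kernel_size - 1) + 1


def cascaded_receptive_field(layers):
    """Receptive field of stride-1 convolutions applied one after another.

    `layers` is an iterable of (kernel_size, dilation) pairs.
    """
    field = 1
    for kernel_size, dilation in layers:
        field += dilation * (kernel_size - 1)
    return field


def conv_weight_count(kernel_size, in_channels, out_channels):
    """Number of weights in a 2D convolution (bias excluded).

    Dilation does not appear here: it changes the span, not the weight count.
    """
    return kernel_size * kernel_size * in_channels * out_channels


print(atrous_kernel_extent(3, 1))                  # 3
print(atrous_kernel_extent(3, 6))                  # 13
print(cascaded_receptive_field([(3, 6), (3, 6)]))  # 25
print(conv_weight_count(3, 64, 64))                # 36864
```

Two cascaded 3x3 convolutions with dilation 6 see a 25-pixel span per axis, whereas two ordinary 3x3 convolutions with the same number of weights see only 5.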

Complementing the encoder, the decoder uses a skip refinement mechanism to sharpen segmentation along object boundaries. A multiresolution supervision strategy adds training signals at more than one resolution, which improves gradient flow, speeds up convergence, and improves pixel-level accuracy.
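A common way to realize supervision at several resolutions is to attach auxiliary prediction heads at coarser scales and add their losses, with weights, to the main loss. The sketch below shows that pattern under stated assumptions: the auxiliary heads are compared against nearest-neighbour downsampled labels, and the weight of 0.5 is a placeholder. The summary does not give the authors' exact scheme or weights, so the function names and values here are hypothetical.

```python
import math


def cross_entropy(probs, labels):
    """Mean pixel-wise cross-entropy.

    probs[i][j] is a list of class probabilities at pixel (i, j);
    labels[i][j] is the ground-truth class index at that pixel.
    """
    total, count = 0.0, 0
    for prob_row, label_row in zip(probs, labels):
        for pixel_probs, label in zip(prob_row, label_row):
            total -= math.log(pixel_probs[label])
            count += 1
    return total / count


def downsample_labels(labels, factor):
    """Nearest-neighbour downsampling: keep every `factor`-th pixel."""
    return [row[::factor] for row in labels[::factor]]


def multires_loss(main_probs, aux_probs_by_factor, labels, aux_weights):
    """Main loss plus weighted auxiliary losses at coarser resolutions."""
    loss = cross_entropy(main_probs, labels)
    for factor, aux_probs in aux_probs_by_factor.items():
        coarse_labels = downsample_labels(labels, factor)
        loss += aux_weights[factor] * cross_entropy(aux_probs, coarse_labels)
    return loss


# Toy example: 4x4 label map, two classes, uniform predictions everywhere.
labels = [[0, 0, 1, 1] for _ in range(4)]
main_probs = [[[0.5, 0.5] for _ in range(4)] for _ in range(4)]
aux_probs = {2: [[[0.5, 0.5] for _ in range(2)] for _ in range(2)]}
aux_weights = {2: 0.5}  # hypothetical weight

loss = multires_loss(main_probs, aux_probs, labels, aux_weights)
print(round(loss, 4))  # 1.0397, i.e. ln 2 + 0.5 * ln 2
```

The auxiliary terms give intermediate decoder stages a direct gradient signal during training and can be dropped at inference time.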

The evaluation covers the Cityscapes, Synthia, SUN RGB-D, ScanNet, and Freiburg Forest benchmarks, which span urban streets, cluttered indoor scenes, and natural forest environments. Across these benchmarks, the proposed framework is reported to achieve higher mean intersection-over-union (mIoU) scores than the prior state of the art in multimodal segmentation.
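For readers unfamiliar with the metric, mIoU averages, over classes, the ratio of correctly labelled pixels to all pixels that either the prediction or the ground truth assigns to that class. The sketch below computes it on flattened label lists. It assumes that classes absent from both prediction and ground truth are skipped, which is one common convention; benchmark toolkits differ in such details, and the function name is illustrative.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union over classes present in pred or target.

    `pred` and `target` are equal-length sequences of class indices.
    """
    intersection = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(pred, target):
        if p == t:
            intersection[p] += 1
            union[p] += 1
        else:
            # A mismatched pixel enlarges the union of both classes involved.
            union[p] += 1
            union[t] += 1
    ious = [i / u for i, u in zip(intersection, union) if u > 0]
    if not ious:
        raise ValueError("no class is present in pred or target")
    return sum(ious) / len(ious)


pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 0]
print(mean_iou(pred, target, num_classes=3))  # 0.5
```

In this toy case the per-class IoUs are 1/3, 2/3, and 1/2, which average to 0.5.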

Beyond efficiency, the framework is robust to adverse perceptual conditions such as glare, fog, and nighttime scenes, which conventional models often handle poorly. The self-supervised fusion adapts to these conditions by recalibrating how much it relies on each modality, so segmentation quality is maintained when one modality degrades.

Methodologically, the work opens avenues for enhanced scene understanding in robotics, deepening multimodal fusion approaches by embracing self-supervision and dynamic adaptation. Practically, adapting to varying environmental conditions without manual tuning or explicit labels reduces the deployment overhead in real-world applications. Theoretically, the approach challenges predetermined fusion strategies, endorsing flexibility and context awareness in model design.

The paper makes a case for adaptable, self-supervised fusion as a route to efficient multimodal learning. Future work could extend SSMA blocks to other domains, giving autonomous systems richer contextual understanding for more reliable decision-making in complex scenarios. The broader aim is autonomous perception that integrates multispectral and other multimodal sensor data robustly.