Insights on Phrase-Based & Neural Unsupervised Machine Translation

The paper "Phrase-Based & Neural Unsupervised Machine Translation" explores unsupervised machine translation (MT) that learns from monolingual corpora alone, without parallel data. It applies a common set of principles to both a phrase-based statistical MT (PBSMT) model and a neural MT (NMT) model, and combines the two, to improve translation accuracy across several language pairs.

Core Contributions

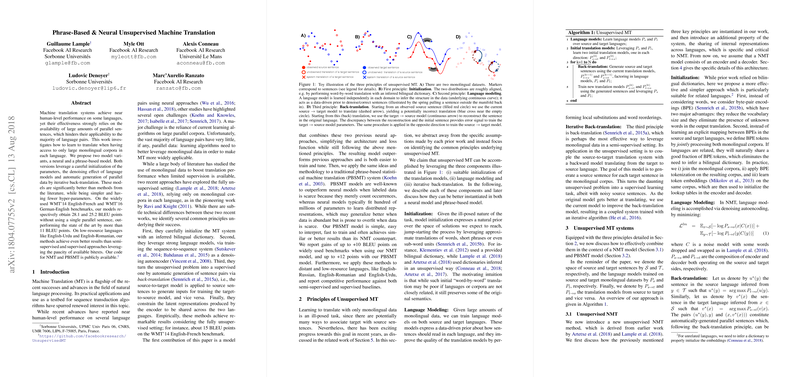

The authors extend existing unsupervised MT methods by building on three principles: initialization, language modeling, and iterative back-translation. These principles guide the development of a system that performs competitively even without parallel sentence corpora.

- Initialization: The approach emphasizes creating robust initial alignments between source and target languages through bilingual dictionaries and shared BPE tokens, enhancing initial translation quality.

- Language Modeling: Strong language models trained on monolingual data provide a data-driven prior that improves the fluency and coherence of translations.

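- Iterative Back-Translation: A cyclic process in which the current model translates monolingual sentences into the other language, and the resulting synthetic sentence pairs are used to train the model for the reverse direction. Repeating this in both directions iteratively refines the translation models.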
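The interplay between initialization and iterative back-translation can be illustrated with a deliberately tiny sketch. It is not the paper's implementation: the word-by-word `translate` function, the count-based `fit_table` trainer, the seed dictionary, and the toy sentences are all assumptions made for illustration, and the language-modeling component is omitted entirely.

```python
from collections import Counter, defaultdict


def translate(sentence, table):
    """Word-by-word translation; words missing from the table are copied unchanged."""
    return " ".join(table.get(word, word) for word in sentence.split())


def fit_table(pairs):
    """Fit a word table from (input, output) sentence pairs of equal length,
    keeping the most frequent output word for each input word."""
    counts = defaultdict(Counter)
    for src, tgt in pairs:
        src_words, tgt_words = src.split(), tgt.split()
        if len(src_words) != len(tgt_words):
            continue  # this toy model only handles position-aligned pairs
        for s, t in zip(src_words, tgt_words):
            counts[s][t] += 1
    return {s: c.most_common(1)[0][0] for s, c in counts.items()}


def iterative_back_translation(seed_s2t, mono_src, mono_tgt, rounds=2):
    """Alternate between the two directions, training each model on
    synthetic inputs produced by the other model."""
    s2t = dict(seed_s2t)  # initialization: a (hypothetical) seed dictionary
    t2s = {}
    for _ in range(rounds):
        # Translate real source sentences into synthetic target sentences,
        # then train target->source on (synthetic target, real source).
        t2s = fit_table([(translate(s, s2t), s) for s in mono_src])
        # Symmetric step: synthetic source from real target sentences,
        # then train source->target on (synthetic source, real target).
        s2t = fit_table([(translate(t, t2s), t) for t in mono_tgt])
    return s2t, t2s


# Toy data (assumed): the two monolingual corpora are NOT sentence-aligned.
seed = {"the": "le", "cat": "chat", "dog": "chien", "sleeps": "dort"}
mono_en = ["the cat sleeps", "the dog sleeps"]
mono_fr = ["le chien dort", "le chat dort"]

s2t, t2s = iterative_back_translation(seed, mono_en, mono_fr)
print(translate("le chat dort", t2s))
```

Note that the target-to-source table is never given directly; it is learned only from synthetic pairs generated by the source-to-target model. A real system would replace the word tables with PBSMT or NMT models and would rely on language models to keep the synthetic output fluent.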

Methodology and Results

The paper benchmarks its methods on WMT'14 English-French and WMT'16 German-English, among other datasets, with attention to unsupervised translation in low-resource settings. The best system reaches 28.1 BLEU on the English-French pair without using any parallel sentences, a significant improvement over previous unsupervised techniques.

- PBSMT and NMT Integration: The authors combine PBSMT’s phrase-level translation tables with neural back-translation, drawing on the strengths of both approaches.

- Empirical Success: The experimental results show that PBSMT frequently outperforms NMT in unsupervised settings, with further gains when the two models are combined.

- Improvements Over Prior Works: The proposed systems outperform earlier unsupervised results by more than 11 BLEU points, demonstrating their efficacy, especially for language pairs lacking substantial parallel data.
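For readers unfamiliar with the metric behind these numbers, BLEU combines clipped n-gram precisions (typically for n = 1 to 4) with a brevity penalty for short outputs. The following is a minimal sentence-level sketch with a single reference and no smoothing. The function names are illustrative, and published scores such as those above are normally computed at corpus level with standard evaluation tooling, so this sketch will not reproduce them.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of order n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(hypothesis, reference, max_n=4):
    """Unsmoothed single-reference BLEU on a 0-100 scale."""
    hyp, ref = hypothesis.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        hyp_counts, ref_counts = ngrams(hyp, n), ngrams(ref, n)
        # Clipped matches: each hypothesis n-gram counts at most as often
        # as it appears in the reference.
        overlap = sum(min(count, ref_counts[gram]) for gram, count in hyp_counts.items())
        total = sum(hyp_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision gives BLEU 0
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: penalize hypotheses shorter than the reference.
    if len(hyp) > len(ref):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - len(ref) / len(hyp))
    # Geometric mean of the n-gram precisions, scaled to 0-100.
    return 100 * brevity_penalty * math.exp(sum(log_precisions) / max_n)


score = sentence_bleu("the cat sat on the mat today", "the cat sat on the mat")
print(round(score, 2))
```

Because the score is a geometric mean over n-gram orders, a gain of several BLEU points generally reflects improvements in longer matching word sequences and not just in individual word choices.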

Theoretical and Practical Implications

Theoretically, the research provides insights into mapping the space of possible translations with minimal supervision, positioning unsupervised MT as a viable alternative for low-resource languages. Practically, it offers a framework for developing MT systems capable of serving diverse linguistic communities without dependence on large bilingual datasets.

Future Outlook in AI

Future work could explore more sophisticated initialization techniques and stronger language models to push the boundaries of unsupervised MT. The adaptive integration of neural and statistical models also opens avenues for developing more robust MT systems.

This paper stands as a crucial contribution to the field of unsupervised MT, pushing toward more inclusive and resource-efficient translation technologies.