An Overview of the Moments in Time Dataset for Event Understanding

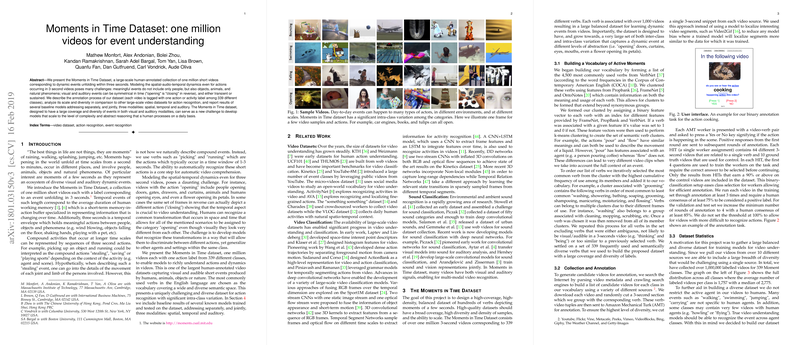

The paper presents the Moments in Time dataset, a large-scale, human-annotated collection of one million 3-second videos. Each video depicts a dynamic event and is labeled with one of 339 action or activity classes. The dataset is intended as a challenging benchmark for models that must scale to the kind of complex, abstract reasoning about events that humans perform effortlessly in daily life.

Challenges in Modeling and Annotation

The dataset poses modeling challenges that span spatial, auditory, and temporal dynamics. A 3-second clip may capture either a transient or a sustained event, and the agent is not always a person: it may be an object, an animal, or a natural phenomenon. Some actions are also temporal counterparts of one another, so playing a clip in reverse can depict a different action (for example, opening versus closing). Recognizing actions in this setting therefore requires identifying the transformation that an event shares across very different agents and settings.
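The reversal issue matters in practice because time-reversal is sometimes used as a data augmentation. The sketch below, which is illustrative and not taken from the paper, reverses a clip's frames and relabels it only when the reversed action is known. The `REVERSE_LABEL` mapping and both function names are hypothetical assumptions.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical mapping from an action to the action its time-reversed clip would
# depict. These pairs are illustrative assumptions, not taken from the dataset.
REVERSE_LABEL: Dict[str, str] = {
    "opening": "closing",
    "closing": "opening",
}


def time_reverse(frames: Sequence) -> List:
    """Return the clip's frames in reverse temporal order."""
    return list(reversed(frames))


def reversal_augment(
    frames: Sequence, label: str, reverse_label: Dict[str, str] = REVERSE_LABEL
) -> Optional[Tuple[List, str]]:
    """Reverse a clip and relabel it, or return None if the reversed label is unknown.

    Reversal is only label-safe when we know what the reversed clip depicts, so
    labels missing from the mapping are rejected rather than copied unchanged.
    """
    if label not in reverse_label:
        return None
    return time_reverse(frames), reverse_label[label]
```

An action that looks the same in both directions could simply map to itself; refusing unknown labels avoids silently training on mislabeled reversed clips.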

The authors describe an extensive annotation process on Amazon Mechanical Turk (AMT) in which human workers verify that each selected video snippet actually depicts its candidate action class. The vocabulary is built from common verbs, grouped into semantic clusters to ensure broad coverage and diversity of event types.
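Human validation of this kind typically collects several yes/no judgments per (video, label) pair and keeps only pairs with sufficient agreement. The summary does not state the paper's exact acceptance rule, so the sketch below uses hypothetical thresholds (`MIN_VOTES`, `MIN_YES_FRACTION`) purely to illustrate vote aggregation.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical acceptance rule: at least 3 judgments, at least 75% answering "yes".
MIN_VOTES = 3
MIN_YES_FRACTION = 0.75


def accepted_pairs(
    votes: Iterable[Tuple[str, str, bool]],
    min_votes: int = MIN_VOTES,
    min_yes_fraction: float = MIN_YES_FRACTION,
) -> List[Tuple[str, str]]:
    """Return the (video_id, label) pairs whose worker votes meet the threshold."""
    tally: Dict[Tuple[str, str], List[int]] = defaultdict(lambda: [0, 0])  # [yes, total]
    for video_id, label, is_yes in votes:
        counts = tally[(video_id, label)]
        counts[0] += int(is_yes)
        counts[1] += 1
    return sorted(
        pair
        for pair, (yes, total) in tally.items()
        if total >= min_votes and yes / total >= min_yes_fraction
    )
```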

Baseline Models and Results

The paper reports results for several baseline models trained on the dataset, analyzing the contributions of the spatial, temporal, and auditory modalities. These models include:

- Spatial models: ResNet50 trained on RGB frames, with variants that differ in initialization, such as weights pre-trained on ImageNet or Places.

- Temporal models: models operating on optical flow, encoded as Cartesian (horizontal and vertical) displacements between frames.

- Auditory models: SoundNet, a network that operates directly on raw audio waveforms, initialized with pre-trained weights.

- Spatiotemporal and multi-modal models: Temporal Segment Networks (TSN) and Inflated 3D ConvNets (I3D), which combine appearance and motion, along with ensembles that fuse spatial, temporal, and auditory models.
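As one example of how these spatiotemporal baselines see a clip, TSN divides a video into equal-length segments, samples one snippet per segment, and combines the per-segment class scores with a consensus function such as averaging. The sketch below shows only that sampling-and-averaging logic on frame indices; the function names, the choice of 3 segments, and the 30 fps frame rate are assumptions for illustration.

```python
import random
from typing import List, Optional, Sequence


def segment_indices(
    num_frames: int, num_segments: int, rng: Optional[random.Random] = None
) -> List[int]:
    """Split [0, num_frames) into equal segments and pick one frame from each.

    With rng=None the middle frame of each segment is chosen (deterministic,
    as at test time); otherwise a random frame per segment (as in training).
    """
    if num_frames < num_segments:
        raise ValueError("need at least one frame per segment")
    bounds = [k * num_frames // num_segments for k in range(num_segments + 1)]
    indices = []
    for start, end in zip(bounds, bounds[1:]):
        if rng is None:
            indices.append((start + end - 1) // 2)  # middle frame of the segment
        else:
            indices.append(rng.randrange(start, end))  # random frame in [start, end)
    return indices


def average_consensus(segment_scores: Sequence[Sequence[float]]) -> List[float]:
    """Average per-class scores over segments to get one video-level prediction."""
    n = len(segment_scores)
    return [sum(class_scores) / n for class_scores in zip(*segment_scores)]


# A 3-second clip at an assumed 30 fps has 90 frames.
print(segment_indices(90, 3))                       # [14, 44, 74]
print(average_consensus([[1.0, 0.0], [0.0, 1.0]]))  # [0.5, 0.5]
```

Sparse sampling keeps the cost per clip fixed regardless of frame count, which is why it pairs naturally with short, fixed-length clips.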

The most effective single model, I3D, achieved a Top-1 accuracy of 29.51% and a Top-5 accuracy of 56.06%, while an ensemble integrating spatial, temporal, and auditory models raised these to 31.16% and 57.67%, respectively.
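Top-1 accuracy counts a prediction as correct only if the highest-scoring class is the true label, while Top-5 accepts any of the five highest-scoring classes. A minimal sketch of the metric (the function name is ours, not the paper's):

```python
from typing import Sequence


def top_k_accuracy(
    scores: Sequence[Sequence[float]], labels: Sequence[int], k: int
) -> float:
    """Fraction of examples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Rank class indices by score, highest first.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)
```

For scale, with 339 classes a uniformly random ranking would score about 1/339 ≈ 0.3% Top-1 and 5/339 ≈ 1.5% Top-5.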

Comparative Insights

Compared with other prominent datasets such as UCF101, Kinetics, and HMDB51, Moments in Time offers a considerably more diverse range of short videos and actions. Applying models trained on standard image datasets (ImageNet for objects, Places for scenes) to its videos shows that the dataset covers a broad range of object and scene categories, which makes it a robust platform for video understanding.
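One simple way to quantify such coverage is to run an image classifier over sampled frames and measure what fraction of its categories appear among the top-1 predictions. The sketch below assumes that measure and a hypothetical `min_count` threshold; the paper's exact protocol may differ.

```python
from collections import Counter
from typing import Iterable


def category_coverage(
    top1_predictions: Iterable[int], num_categories: int, min_count: int = 1
) -> float:
    """Fraction of a classifier's categories predicted at least min_count times."""
    counts = Counter(top1_predictions)
    covered = sum(1 for c in range(num_categories) if counts[c] >= min_count)
    return covered / num_categories
```

Here `top1_predictions` would be the class indices an ImageNet or Places model assigns to frames; raising `min_count` filters out categories that appear only by chance.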

Experiments indicate that models pre-trained on Moments in Time transfer competitively to datasets with longer videos, suggesting that the dataset is useful for training generalizable video recognition systems.
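A common, simple transfer recipe is a linear probe: keep the pre-trained network frozen, extract features for the target dataset's clips, and train only a new classifier head. The paper's transfer experiments may fine-tune more of the network; this sketch shows only the linear-probe variant, and the feature dimensionality, learning rate, and epoch count are arbitrary assumptions. It trains a softmax classifier by full-batch gradient descent with NumPy.

```python
import numpy as np


def train_linear_head(
    features: np.ndarray,
    labels: np.ndarray,
    num_classes: int,
    lr: float = 0.5,
    epochs: int = 200,
    seed: int = 0,
):
    """Fit a softmax classifier on frozen features by full-batch gradient descent."""
    rng = np.random.default_rng(seed)
    n, d = features.shape
    W = rng.normal(scale=0.01, size=(d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(epochs):
        logits = features @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n  # gradient of mean cross-entropy w.r.t. logits
        W -= lr * features.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b


def predict(features: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Return the highest-scoring class index for each feature vector."""
    return np.argmax(features @ W + b, axis=1)
```

Because the backbone stays frozen, only `W` and `b` are learned, which makes this a cheap first check of how well pre-trained features transfer.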

Implications and Future Directions

The paper concludes by emphasizing the dataset's potential to advance machines' ability to understand dynamic video content. With its high intra-class variation and multi-modal dynamics, the dataset establishes a challenging benchmark for research in machine learning and computer vision, and it invites work on models that capture abstract and complex events by jointly modeling spatial, temporal, and auditory signals.

The dataset opens further avenues for research in holistic video understanding, moving machines closer to human-level event comprehension.