Reality Oriented Adaptation for Semantic Segmentation of Urban Scenes

The paper "ROAD: Reality Oriented Adaptation for Semantic Segmentation of Urban Scenes" presents two techniques for adapting semantic segmentation models trained on synthetic data to real urban scenes. The challenge it addresses is the performance drop that typically occurs when a model trained on synthetic images is deployed on real-world images. The authors attribute this drop to two causes: the model overfits to the synthetic image style, and the distributions of synthetic and real images differ.

Core Contributions

The authors propose two primary mechanisms to address these challenges:

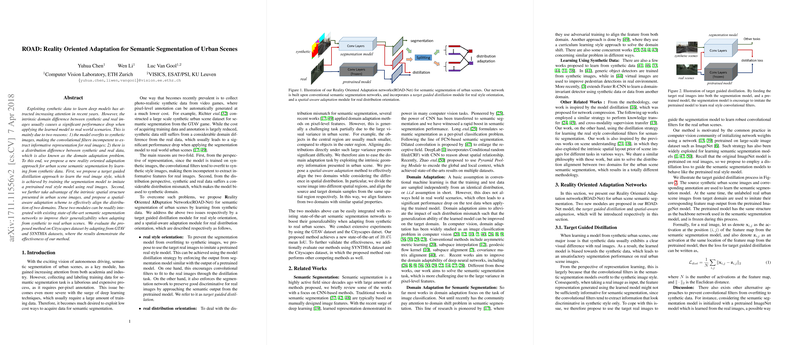

- Target Guided Distillation: The segmentation model is trained to imitate a pretrained real-style model. Target-domain real images are fed into both the segmentation model and the pretrained model, and a distillation loss encourages the segmentation model's outputs to stay close to those of the pretrained network. This exposes the segmentation model to real image styles and discourages overfitting to synthetic features.

- Spatial-Aware Adaptation: To handle the distribution mismatch between domains, the authors exploit the intrinsic spatial structure of urban scenes. Each image is partitioned into several spatial regions, and features are aligned between the synthetic and real domains within the same region rather than across the whole image. The motivation is that appearance depends on position: because of perspective, objects near the image center typically look different from objects near the periphery, so region-wise alignment compares like with like.
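
The two mechanisms above can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation. The function names (`distillation_loss`, `region_index`, `group_by_region`), the mean squared Euclidean distance as the distillation measure, and the 3x3 grid are all assumptions made for illustration. The sketch only shows how features might be grouped by spatial region; the alignment step applied within each region is not shown.

```python
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def distillation_loss(student: Sequence[Vector], teacher: Sequence[Vector]) -> float:
    """Mean squared Euclidean distance between paired feature vectors.

    `student` holds features from the segmentation model and `teacher`
    holds features from the pretrained model, both computed on the same
    real (target-domain) images. The distance measure is an assumption.
    """
    if len(student) != len(teacher) or not student:
        raise ValueError("need equally sized, non-empty feature lists")
    total = 0.0
    for s, t in zip(student, teacher):
        total += sum((a - b) ** 2 for a, b in zip(s, t))
    return total / len(student)


def region_index(row: int, col: int, height: int, width: int, grid: int = 3) -> int:
    """Map a pixel position to one of grid * grid regions (row-major order)."""
    r = min(row * grid // height, grid - 1)
    c = min(col * grid // width, grid - 1)
    return r * grid + c


def group_by_region(
    feats: Dict[Tuple[int, int], Vector], height: int, width: int, grid: int = 3
) -> Dict[int, List[Vector]]:
    """Group per-pixel features by region so each region can be aligned separately."""
    groups: Dict[int, List[Vector]] = {}
    for (row, col), vec in feats.items():
        groups.setdefault(region_index(row, col, height, width, grid), []).append(vec)
    return groups
```

In a setup like this, source and target features would each be passed through `group_by_region`, and the domain alignment loss would be computed per region index instead of once over the whole image.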

These two components are integrated into the ROAD-Net framework and can be applied to contemporary semantic segmentation networks such as DeepLab and PSPNet, with the aim of improving generalization to unseen real-world urban scenes.
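
One plausible way to write a training objective that combines the segmentation loss with the two components is sketched below. The symbols are assumptions introduced here for illustration, not notation confirmed by this summary: the trade-off weights $\lambda_1$ and $\lambda_2$, the segmentation-model features $x_i$ and pretrained-model features $z_i$ at $N$ positions of a real image, and the region-wise alignment term $\mathcal{L}_{\text{spt}}$.

```latex
\mathcal{L} = \mathcal{L}_{\text{seg}}
            + \lambda_{1}\,\mathcal{L}_{\text{dist}}
            + \lambda_{2}\,\mathcal{L}_{\text{spt}},
\qquad
\mathcal{L}_{\text{dist}} = \frac{1}{N}\sum_{i=1}^{N} \lVert x_i - z_i \rVert_2^2
```

Here $\mathcal{L}_{\text{seg}}$ is the supervised loss on labeled synthetic images, $\mathcal{L}_{\text{dist}}$ is computed on unlabeled real images, and $\mathcal{L}_{\text{spt}}$ sums a domain alignment loss over the spatial regions.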

Results and Validation

The framework was evaluated with Cityscapes as the target domain and with GTAV and SYNTHIA as source domains. ROAD-Net improved mean Intersection over Union (IoU) over existing methods, reaching a then state-of-the-art 39.4% mean IoU on Cityscapes when adapting from GTAV with PSPNet as the base model. Both the distillation module and the spatial-aware module contributed to the gain, which supports the usefulness of each component.
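
For readers unfamiliar with the metric, the following is a minimal sketch of how mean IoU can be computed from flattened prediction and ground-truth label lists. The function name `mean_iou` and the `ignore_index` value of 255 are assumptions for illustration. Benchmark tooling typically accumulates the per-class counts over the whole evaluation set before averaging.

```python
from typing import Sequence


def mean_iou(
    pred: Sequence[int],
    target: Sequence[int],
    num_classes: int,
    ignore_index: int = 255,  # assumed label for pixels excluded from scoring
) -> float:
    """Average per-class IoU = TP / (TP + FP + FN) over classes that occur."""
    inter = [0] * num_classes  # true positives per class
    union = [0] * num_classes  # TP + FP + FN per class
    for p, t in zip(pred, target):
        if t == ignore_index:
            continue
        if p == t:
            inter[t] += 1
            union[t] += 1
        else:
            union[p] += 1  # false positive for the predicted class
            union[t] += 1  # false negative for the true class
    # Skip classes that never appear in either prediction or ground truth.
    ious = [i / u for i, u in zip(inter, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0
```

For example, `mean_iou([0, 0, 1, 1], [0, 1, 1, 255], num_classes=2)` scores each class at 1/2 and returns 0.5; the last pixel is ignored.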

Implications and Future Prospects

This research is most relevant to autonomous driving and related fields, where robust real-time scene understanding is essential. Synthetic datasets come with annotations at low cost, so a model that adapts well from synthetic to real images reduces the need for expensive real-world data collection and labeling. ROAD-Net narrows this synthetic-to-real domain gap.

Future research could extend the spatial-aware module with dynamic partitioning methods or with temporal information for video datasets. Applying ROAD-Net beyond urban scenes to more diverse environments would also test how far the spatial-layout assumption holds and may expose new challenges.

Overall, the methods and results in this paper show that synthetic data, combined with suitable adaptation techniques, can be used to train segmentation models that perform better on real-world scenes.