An Expert Overview of "Learning to Segment Every Thing"

The paper "Learning to Segment Every Thing" extends instance segmentation models, which are traditionally limited to the small set of categories that have mask annotations, through a partially supervised training paradigm. The authors train a Mask R-CNN model on a large set of categories, all of which have bounding box annotations but only a subset of which have mask annotations. This is an important step toward instance segmentation models that cover a wider range of real-world visual concepts.
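The partially supervised setup described above can be sketched as a loss in which box supervision applies to every instance while mask supervision applies only to instances whose category has mask annotations. This is a minimal illustration under stated assumptions: the function name, its arguments, and the simple averaging are hypothetical and are not taken from the paper's implementation.

```python
import numpy as np

def partially_supervised_loss(box_loss, mask_loss, labels, classes_with_masks):
    """Combine per-instance losses when only some classes have mask labels.

    box_loss, mask_loss: 1-D sequences of per-instance loss values.
    labels: 1-D sequence of ground-truth class ids, one per instance.
    classes_with_masks: iterable of class ids that carry mask annotations.
    Returns (total_loss, has_mask), where has_mask is a boolean array.
    """
    box_loss = np.asarray(box_loss, dtype=float)
    mask_loss = np.asarray(mask_loss, dtype=float)
    has_mask = np.isin(labels, list(classes_with_masks))

    # Box supervision is available for every instance.
    total_box = box_loss.mean()
    # Mask supervision contributes only where a mask annotation exists.
    total_mask = mask_loss[has_mask].mean() if has_mask.any() else 0.0
    return total_box + total_mask, has_mask
```

Instances from box-only categories still shape the detection branch, but they never contribute a mask-loss term, which is why a separate mechanism is needed to obtain mask predictors for those categories.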

Key Contributions

- Partially Supervised Instance Segmentation: Current instance segmentation datasets cover only a small number of categories because segmentation masks are expensive to annotate. The partially supervised framework makes it possible to combine abundant bounding box annotations with sparse mask annotations.

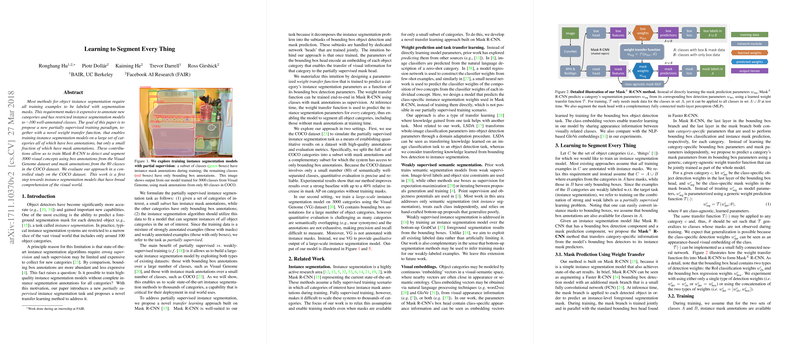

- Weight Transfer Function: Central to the method is a weight transfer function that predicts a category's mask segmentation parameters from its bounding box detection parameters, so categories that lack mask annotations during training can still be segmented. The function is a learned, parameterized mapping that plugs into the existing Mask R-CNN architecture and is trained together with it.

- Comprehensive Evaluation: In controlled experiments on the COCO dataset, the authors report up to a 40% relative increase in mask average precision (AP) over baseline methods on categories that have no mask annotations during training. These results indicate that the weight transfer function helps the model generalize to categories it has only seen with box supervision.

- Large-Scale Instance Segmentation: Applying the technique to the Visual Genome (VG) dataset, the authors train an instance segmentation model that handles 3000 categories. Because VG does not provide comprehensive mask labels, the evidence here is qualitative, but the outputs suggest promising segmentation of a wide variety of objects, including abstract and complex concepts.
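The weight transfer idea from the contributions above can be sketched as a small network that maps each category's detection weights to mask weights. This is a hedged illustration, not the authors' implementation: the two-layer design, the LeakyReLU activation, all dimensions, and the names `init_transfer_params`, `transfer`, and `predict_mask_logits` are assumptions chosen for clarity.

```python
import numpy as np

def leaky_relu(x, slope=0.1):
    return np.where(x > 0, x, slope * x)

def init_transfer_params(det_dim, hidden_dim, seg_dim, seed=0):
    """Create the parameters shared by all categories (hypothetical sizes)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.01, (det_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "W2": rng.normal(0.0, 0.01, (hidden_dim, seg_dim)),
        "b2": np.zeros(seg_dim),
    }

def transfer(w_det, params):
    """Map detection weights (C, det_dim) to mask weights (C, seg_dim).

    The same params are applied to every category, so a category with
    box labels only still receives predicted mask weights.
    """
    hidden = leaky_relu(w_det @ params["W1"] + params["b1"])
    return hidden @ params["W2"] + params["b2"]

def predict_mask_logits(features, w_seg):
    """Apply per-class mask weights (C, seg_dim) to features (seg_dim, H, W)."""
    return np.einsum("cd,dhw->chw", w_seg, features)

# Usage with random stand-in values: 5 categories, some without mask labels.
rng = np.random.default_rng(1)
w_det = rng.normal(size=(5, 16))
params = init_transfer_params(det_dim=16, hidden_dim=32, seg_dim=8)
w_seg = transfer(w_det, params)
logits = predict_mask_logits(rng.normal(size=(8, 14, 14)), w_seg)
```

Because the transfer parameters are shared across categories, they can be fitted using only the categories that have masks and then applied unchanged to the rest.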

Implications and Future Work

From a practical perspective, the approach could substantially lower the annotation cost that currently limits the scaling of instance segmentation models. Extending the set of categories beyond those with costly segmentation annotations makes these models applicable in more diverse and dynamic settings, such as autonomous driving, robotic perception, and large-scale image analysis.

Theoretically, this work opens new directions in transfer learning and domain adaptation. By demonstrating how bounding box annotations can inform segmentation for categories that have no mask labels, the paper suggests ways to combine annotations of different granularity within a single model.

Future work, as the authors hint, could improve the weight transfer function, possibly by incorporating more sophisticated semantic embeddings. Moreover, strategically choosing which additional categories to annotate with masks, guided by this research's insights into transferability and annotation utility, could further improve partially supervised models.

Conclusion

"Learning to Segment Every Thing" makes a compelling case for the integration of partially supervised learning paradigms in extending the capabilities of instance segmentation models. Through rigorous experimentation and methodical innovation, the paper not only advances the current state of instance segmentation but also provides a framework for future research in scaling computer vision tasks to encompass a broader visual ontology with limited labeled data. This research constitutes an important contribution to the field, setting the stage for further exploration into scalable, efficient, and comprehensively capable visual recognition systems.