Insights into the Total-Text Dataset for Scene Text Detection and Recognition

The paper "Total-Text: A Comprehensive Dataset for Scene Text Detection and Recognition" addresses a critical gap in scene text detection and recognition. Its primary contribution is the Total-Text dataset, which emphasizes curved text, an aspect that is often under-represented in existing datasets. Because of this omission, comparatively little research has focused on detecting and recognizing text with varied orientations, particularly curved text, in natural scenes.

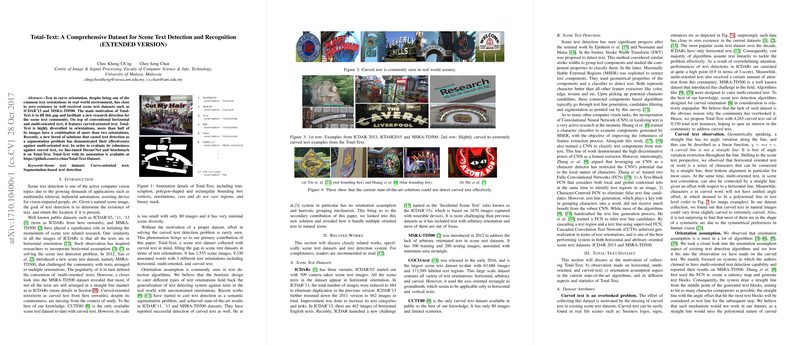

Scene text detection and recognition is a domain of computer vision with significant practical applications, including multimedia retrieval and assistive technologies. However, progress in this field has been constrained by the limited scope of available datasets, which predominantly feature horizontal or multi-oriented text and contain little to no curved text. The paper fills this gap with a dataset of 1,555 images containing 9,330 annotated words in three orientation categories: horizontal, multi-oriented, and curved.

Notably, Total-Text reflects the orientation diversity of real-world text: more than half of its images contain text in a mix of orientations (horizontal, multi-oriented, and curved). This diversity matters for developing robust models that generalize to the range of text orientations encountered in practice. Curved text poses distinct challenges, both in how its spatial extent is described and in the algorithms needed to localize it, and the dataset makes these challenges explicit and measurable.

The authors advocate polygon-shaped annotations instead of traditional rectangular bounding boxes, because a polygon can tightly enclose curved text and therefore includes far less background in the ground truth. This makes the annotations more precise for both training and evaluating models, particularly for text that deviates from a straight line, which is common in real scenes.
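
To make the noise argument concrete, the sketch below compares the area of a polygon annotation with the area of its axis-aligned bounding box, using the shoelace formula. It is an illustration only: the list-of-(x, y)-vertices representation and the arch-shaped example word are hypothetical and are not the dataset's actual ground-truth file format.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(points: List[Point]) -> float:
    """Area of a simple polygon via the shoelace formula (vertices in drawing order)."""
    twice_area = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def bbox_area(points: List[Point]) -> float:
    """Area of the axis-aligned rectangle that encloses all vertices."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return float((max(xs) - min(xs)) * (max(ys) - min(ys)))


def fill_ratio(points: List[Point]) -> float:
    """Fraction of the bounding box actually covered by the text polygon."""
    return polygon_area(points) / bbox_area(points)


# Hypothetical curved word: a band 2 units thick bent into an inverted "V" (arch).
arch = [(0, 0), (0, 2), (5, 7), (10, 2), (10, 0), (5, 5)]
# Hypothetical straight word: a plain 4 x 2 rectangle.
straight = [(0, 0), (4, 0), (4, 2), (0, 2)]

print(polygon_area(arch), bbox_area(arch))  # 20.0 70.0
print(round(fill_ratio(arch), 3))           # 0.286
print(fill_ratio(straight))                 # 1.0
```

For the toy arch, the polygon covers only 2/7 of its bounding box, so roughly 71% of a rectangular annotation would be background; for a straight horizontal word the two annotations coincide.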

The paper also evaluates semantic segmentation for text detection by fine-tuning the DeconvNet architecture. The findings indicate that segmentation-based approaches can capture varied text orientations without explicit angle assumptions or heuristic grouping, although they struggle with complex backgrounds and with separating text instances that lie close together. The DeconvNet experiments also suggest that the spatial resolution of the feature maps strongly influences segmentation quality, and with it the accuracy of text-region predictions across orientations.
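
The instance-separation weakness and the role of resolution can be illustrated with a toy pipeline: threshold a per-pixel text probability map, treat each connected region as one text instance, and compare the result at full resolution with a coarser grid. This is a hypothetical sketch, not DeconvNet or the paper's post-processing; the 0.5 threshold, 4-connectivity, and the use of max pooling over the mask to mimic a coarse prediction are all assumptions made for illustration.

```python
from collections import deque
from typing import List

Mask = List[List[int]]


def threshold(prob: List[List[float]], t: float = 0.5) -> Mask:
    """Binarize a per-pixel text probability map (assumed threshold t)."""
    return [[1 if p >= t else 0 for p in row] for row in prob]


def count_instances(mask: Mask) -> int:
    """Count 4-connected foreground regions; each region is treated as one text instance."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    count = 0
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            count += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            # Breadth-first flood fill over the 4-neighbourhood.
            while queue:
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count


def coarsen(mask: Mask, factor: int) -> Mask:
    """Mimic a lower-resolution prediction: a coarse cell is text if any pixel in its block is."""
    h, w = len(mask), len(mask[0])
    return [
        [
            max(mask[y][x]
                for y in range(r, min(r + factor, h))
                for x in range(c, min(c + factor, w)))
            for c in range(0, w, factor)
        ]
        for r in range(0, h, factor)
    ]


# Toy probability map: two words on one line separated by a one-pixel gap (column 3).
prob = [
    [0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
    [0.9, 0.8, 0.9, 0.2, 0.9, 0.9, 0.8, 0.9],
    [0.9, 0.9, 0.8, 0.3, 0.8, 0.9, 0.9, 0.9],
    [0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
]

mask = threshold(prob)
coarse = coarsen(mask, 2)
print(count_instances(mask))    # 2 separate words
print(count_instances(coarse))  # 1 merged region
```

At full resolution the one-pixel gap keeps the two words apart, so two instances are found; after 2x coarsening the gap disappears and the words merge into a single region, which is one simple way to see why output resolution matters for closely spaced text.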

In terms of implications, this research calls for reevaluating text detection strategies so that curved text receives proper attention. The Total-Text dataset provides a new benchmark that pushes algorithm development toward more comprehensive and generalizable text detection systems. Looking forward, the work highlights pixel-level prediction and feature map resolution as important factors, and more sophisticated architectures, possibly incorporating additional segmentation layers or attention mechanisms, can be expected to emerge to handle curved and multi-oriented text in unconstrained environments.

This paper sets the stage for future work in which scene text detection algorithms must account for the full complexity of text in natural settings, drawing together machine learning, pattern recognition, and insights from human visual perception within computer vision applications.