Overview of "Fast YOLO: A Fast You Only Look Once System for Real-time Embedded Object Detection in Video"

The paper presents Fast YOLO, an enhancement of the YOLOv2 architecture designed to make object detection practical on embedded systems with limited computational power and memory. Fast YOLO performs real-time object detection in video on resource-constrained devices such as smartphones and surveillance cameras.

Key Contributions

The paper combines two strategies to build Fast YOLO:

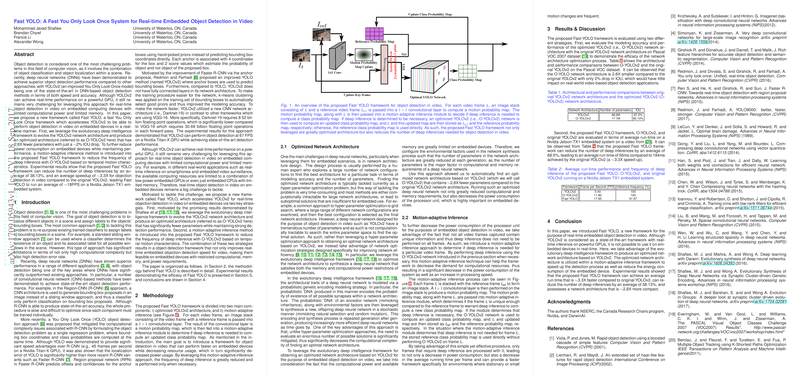

- Optimized YOLOv2 Architecture: Using the evolutionary deep intelligence framework, the authors evolved the YOLOv2 network architecture into a streamlined version referred to as O-YOLOv2. O-YOLOv2 has 2.8 times fewer parameters than YOLOv2, at the cost of only a ~2% drop in Intersection over Union (IOU), a measure of how closely predicted bounding boxes overlap the ground truth. Fewer parameters mean less computation and memory traffic per frame, which translates into faster inference and lower power consumption on resource-constrained hardware.

- Motion-Adaptive Inference: To reduce power consumption further, the authors add a motion-adaptive inference method that uses the temporal motion characteristics of the video to decide whether a frame needs a full deep inference with O-YOLOv2; when it does not, the costly inference step is skipped. This reduces the number of deep inferences by an average of 38.13%.
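The evolutionary deep intelligence framework synthesizes successive "offspring" networks from an ancestor network, with each synapse inherited stochastically. The sketch below is a heavily simplified illustration, not the authors' implementation: it assumes a synapse survives with probability `env_factor * |w| / max|w|`, where the hypothetical `env_factor` stands in for the framework's environmental pressure toward smaller networks. The actual probability model used in the paper may differ. Repeating the sampling over generations shows how the parameter count shrinks.

```python
import random
from typing import List


def synthesize_offspring(weights: List[float], env_factor: float,
                         rng: random.Random) -> List[float]:
    """Sample an offspring synapse set from a parent's weights (simplified sketch).

    Assumption: a synapse is inherited with probability
    env_factor * |w| / max|w|, so stronger connections are more likely to
    survive. This magnitude-proportional rule is a stand-in, not the paper's model.
    """
    w_max = max((abs(w) for w in weights), default=0.0)
    if w_max == 0.0:
        return [0.0] * len(weights)  # nothing left to inherit
    offspring = []
    for w in weights:
        p_keep = env_factor * abs(w) / w_max
        # Either inherit the parent's weight or prune the synapse (set it to 0).
        offspring.append(w if rng.random() < p_keep else 0.0)
    return offspring


def count_params(weights: List[float]) -> int:
    """Number of surviving (non-zero) synapses."""
    return sum(1 for w in weights if w != 0.0)


if __name__ == "__main__":
    rng = random.Random(0)
    ancestor = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
    child = ancestor
    for generation in range(1, 4):
        child = synthesize_offspring(child, env_factor=0.8, rng=rng)
        ratio = count_params(ancestor) / max(count_params(child), 1)
        print(f"generation {generation}: {count_params(child)} synapses, "
              f"{ratio:.1f}x fewer than the ancestor")
```

The sketch only covers the structural sampling step; it does not model the retraining of each offspring network or the accuracy checks that would decide when to stop evolving.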
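The motion-adaptive idea can be illustrated with a minimal sketch. The paper bases its decision on temporal motion characteristics; this sketch substitutes a much simpler stand-in, the mean absolute pixel difference between the current frame and the last frame that received a deep inference, compared against a hypothetical `threshold`. `detector` is any callable standing in for O-YOLOv2, and reusing the previous detections on low-motion frames is an assumption of this sketch.

```python
from typing import Callable, List, Optional, Sequence

Frame = Sequence[Sequence[float]]  # grayscale frame as rows of pixel values


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel difference between two equal-sized frames."""
    total, count = 0.0, 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count if count else 0.0


class MotionAdaptiveDetector:
    """Run the expensive detector only when the scene has changed enough."""

    def __init__(self, detector: Callable[[Frame], List[str]], threshold: float):
        self.detector = detector    # stand-in for a deep detector such as O-YOLOv2
        self.threshold = threshold  # hypothetical motion threshold
        self.ref_frame: Optional[Frame] = None
        self.cached: List[str] = []
        self.deep_calls = 0
        self.frames_seen = 0

    def process(self, frame: Frame) -> List[str]:
        self.frames_seen += 1
        if self.ref_frame is None or mean_abs_diff(frame, self.ref_frame) > self.threshold:
            # First frame or significant motion: pay for a full deep inference.
            self.cached = self.detector(frame)
            self.ref_frame = frame
            self.deep_calls += 1
        # Otherwise, reuse the detections from the last deep inference.
        return self.cached

    def skip_rate(self) -> float:
        """Fraction of frames for which deep inference was skipped."""
        return 1.0 - self.deep_calls / self.frames_seen if self.frames_seen else 0.0


if __name__ == "__main__":
    static = [[0, 0], [0, 0]]
    moved = [[100, 100], [100, 100]]
    mad = MotionAdaptiveDetector(lambda frame: ["object"], threshold=10.0)
    for frame in [static, static, moved, moved, moved]:
        mad.process(frame)
    # Only the first frame and the first "moved" frame trigger deep inference.
    print(mad.deep_calls, "deep inferences,", f"skip rate {mad.skip_rate():.2f}")
```

Comparing against the last inferred frame rather than the immediately preceding one keeps slow, gradual motion from accumulating unnoticed across many skipped frames.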

Experimental Results

The authors evaluate Fast YOLO on the Nvidia Jetson TX1 embedded system. For object detection in video, Fast YOLO achieves an average speed-up of ~3.3 times over the original YOLOv2 and runs at an average of ~18 frames per second (FPS). These results show a substantial gain in speed, while the streamlined architecture costs only about 2% in IOU.
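The reported figures also support a quick back-of-the-envelope check. The sketch below assumes that the ~3.3x speed-up and the ~18 FPS average can be combined directly and that the motion check itself costs nothing; neither assumption comes from the paper, so the outputs are rough estimates rather than reported results. Under those assumptions, the implied YOLOv2 baseline is about 18 / 3.3 ≈ 5.5 FPS, skipping 38.13% of deep inferences alone accounts for about 1 / (1 - 0.3813) ≈ 1.6x, and the remaining ≈ 2.0x would have to come from O-YOLOv2 running faster per frame.

```python
def implied_baseline_fps(fast_fps: float, speedup: float) -> float:
    """FPS of the original model implied by a measured FPS and speed-up."""
    return fast_fps / speedup


def skip_speedup(skip_fraction: float) -> float:
    """Speed-up from skipping a fraction of deep inferences (assumes the check is free)."""
    return 1.0 / (1.0 - skip_fraction)


def residual_speedup(total_speedup: float, skip_fraction: float) -> float:
    """Per-frame speed-up left for the network once skipping is factored out."""
    return total_speedup / skip_speedup(skip_fraction)


if __name__ == "__main__":
    print(f"implied YOLOv2 baseline: {implied_baseline_fps(18.0, 3.3):.2f} FPS")
    print(f"speed-up from skipping alone: {skip_speedup(0.3813):.2f}x")
    print(f"implied per-frame speed-up of O-YOLOv2: {residual_speedup(3.3, 0.3813):.2f}x")
```

Because averages over different videos do not compose exactly, these numbers should be read as a consistency check on the reported results, not as measurements.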

Implications and Future Directions

The advances in Fast YOLO have practical implications for real-time embedded applications such as autonomous vehicles and portable devices. By achieving efficient object detection under tight resource constraints, Fast YOLO makes it more feasible to run detection on the device itself (edge-based intelligence) instead of relying on cloud servers, which allows faster and more economical real-world deployments.

From a research perspective, Fast YOLO points to further work on the synthesis of deep neural networks, specifically on optimizing architectures without significant losses in performance. Future research could apply similar evolutionary frameworks to architectures for other tasks or domains beyond video object detection.

In addition, the motion-adaptive inference module illustrates how an inference pipeline can be modulated dynamically based on the content of the video. Future work might explore more sophisticated models for judging when a frame warrants a fresh deep inference, for example by integrating predictive or context-aware components.

Overall, the paper makes a practical contribution to object detection under the constraints of real-world embedded systems, offering a framework that balances speed, resource consumption, and accuracy.