An Overview of Generalized Multimodal Factorized High-order Pooling for Visual Question Answering

The paper "Beyond Bilinear: Generalized Multimodal Factorized High-order Pooling for Visual Question Answering" presents a framework for improving visual question answering (VQA). The research addresses three core challenges in VQA: learning fine-grained feature representations, fusing multimodal features effectively, and predicting answers robustly.

Key Contributions and Methodologies

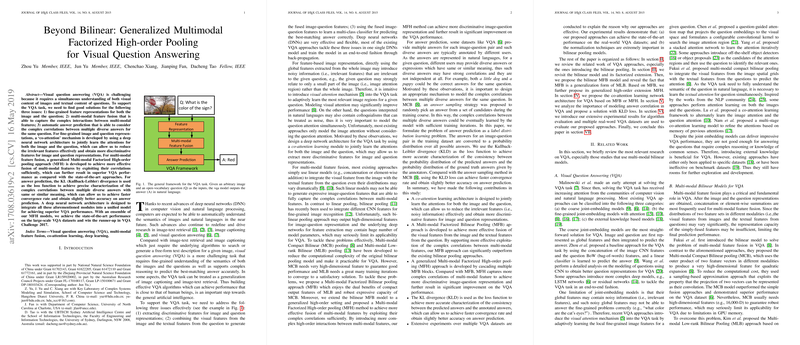

- Co-Attention Mechanism: The paper proposes a co-attention learning framework that jointly learns attention over question words and image regions. Attending only to the relevant words and regions suppresses noise from irrelevant features, which makes both the image and the question representations more discriminative.

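- Multimodal Factorized Bilinear Pooling (MFB): The authors introduce MFB to fuse visual and textual features. Instead of learning a full bilinear weight matrix for each output dimension, MFB factorizes each matrix into two low-rank matrices, so the fused feature is computed by projecting both features into a common space, multiplying them element-wise, and sum-pooling the result. This sharply reduces the number of parameters and the computational cost while keeping the expressiveness of bilinear interactions. In the paper's experiments, MFB outperforms the existing bilinear pooling methods MCB (multimodal compact bilinear pooling) and MLB (multimodal low-rank bilinear pooling).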

- Generalized High-order Model (MFH): Extending beyond bilinear interactions, the generalized high-order pooling model MFH cascades multiple MFB blocks, passing each block's intermediate (pre-pooling) features into the next so that higher-order correlations between the two modalities are captured. The outputs of all blocks are concatenated into the fused representation, which further improves VQA accuracy over a single MFB block.

- Kullback-Leibler Divergence (KLD) Loss for Answer Prediction: Each VQA question comes with multiple annotator answers. Rather than sampling a single answer as a hard training label, the paper uses the KL divergence between the predicted answer distribution and the ground-truth answer distribution as the loss. The authors report that this yields faster convergence and better prediction accuracy.
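The MFB and MFH fusion steps described above can be sketched as a forward pass in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: random matrices stand in for the learned projections, and the function names (`mfb_expand`, `mfb_squeeze`, `mfb`, `mfh`) and the toy sizes are hypothetical. Dropout and the attention modules are omitted; the step after sum pooling is the power and L2 normalization discussed in the paper.

```python
import numpy as np


def power_l2_normalize(z, eps=1e-12):
    # Power (signed square-root) normalization, then L2 normalization.
    z = np.sign(z) * np.sqrt(np.abs(z))
    return z / max(np.linalg.norm(z), eps)


def mfb_expand(x, y, U, V):
    # Project x (dim m) and y (dim n) into a shared k*o space and multiply element-wise.
    # U has shape (m, k*o), V has shape (n, k*o).
    return (U.T @ x) * (V.T @ y)


def mfb_squeeze(z_exp, k):
    # Sum-pool non-overlapping windows of k consecutive entries: k*o -> o.
    return z_exp.reshape(-1, k).sum(axis=1)


def mfb(x, y, U, V, k):
    # One MFB block: expand, sum-pool, normalize.
    return power_l2_normalize(mfb_squeeze(mfb_expand(x, y, U, V), k))


def mfh(x, y, Us, Vs, k):
    # Cascade of p MFB blocks. Block i multiplies its own expanded feature with the
    # expanded feature of block i-1 (all ones for the first block), so each block
    # models a higher-order interaction. The p pooled outputs are concatenated.
    outputs = []
    prev = np.ones(Us[0].shape[1])
    for U, V in zip(Us, Vs):
        z_exp = prev * mfb_expand(x, y, U, V)
        outputs.append(power_l2_normalize(mfb_squeeze(z_exp, k)))
        prev = z_exp
    return np.concatenate(outputs)


# Toy example with hypothetical sizes: feature dims m and n, factor k, output o, p blocks.
rng = np.random.default_rng(0)
m, n, k, o, p = 8, 6, 5, 4, 2
x = rng.standard_normal(m)  # stand-in image feature
y = rng.standard_normal(n)  # stand-in question feature
Us = [rng.standard_normal((m, k * o)) for _ in range(p)]
Vs = [rng.standard_normal((n, k * o)) for _ in range(p)]

z_mfb = mfb(x, y, Us[0], Vs[0], k)
z_mfh = mfh(x, y, Us, Vs, k)
print(z_mfb.shape, z_mfh.shape)  # (4,) (8,)
```

Summing each window of k products equals $x^\top U_i V_i^\top y$, where $U_i$ and $V_i$ are the k columns belonging to output i. That is a bilinear form whose weight matrix has rank at most k, which is why MFB needs only the two projection matrices instead of one full matrix per output dimension. The first MFH block receives an all-ones "previous" feature, so it reduces to a plain MFB block.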
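The two training targets compared in the last item can be contrasted with a small NumPy sketch. For illustration only, it assumes a hypothetical four-answer vocabulary and ten annotator answers that all fall inside that vocabulary; the function names are hypothetical and this is not the authors' training code. The soft target is the fraction of annotators who gave each answer, and the KLD loss compares it with the softmax of the model's answer scores, whereas the sampling baseline picks one annotator answer at random as a hard cross-entropy label.

```python
import numpy as np


def log_softmax(logits):
    # Numerically stable log of the softmax over candidate answers.
    shifted = logits - logits.max()
    return shifted - np.log(np.exp(shifted).sum())


def answer_distribution(annotator_answers, vocab):
    # Soft target: fraction of annotators who gave each candidate answer.
    counts = np.array([annotator_answers.count(a) for a in vocab], dtype=float)
    return counts / counts.sum()


def kld_loss(logits, target):
    # KL(target || prediction) = sum_a t_a * (log t_a - log p_a).
    # Answers with t_a = 0 contribute nothing, so they are masked out.
    log_p = log_softmax(logits)
    mask = target > 0
    return float(np.sum(target[mask] * (np.log(target[mask]) - log_p[mask])))


def sampled_ce_loss(logits, annotator_answers, vocab, rng):
    # Baseline: sample one annotator answer as a hard label, then use cross-entropy.
    label = vocab.index(annotator_answers[rng.integers(len(annotator_answers))])
    return float(-log_softmax(logits)[label])


vocab = ["yes", "no", "2", "3"]                # hypothetical answer vocabulary
answers = ["2"] * 6 + ["3"] * 4                # hypothetical annotator answers
target = answer_distribution(answers, vocab)   # [0, 0, 0.6, 0.4]
logits = np.array([0.0, -1.0, 2.0, 1.5])       # stand-in model scores

print(kld_loss(logits, target))
print(sampled_ce_loss(logits, answers, vocab, np.random.default_rng(0)))
```

Because the entropy of the target does not depend on the model, minimizing this KLD gives the same gradients as minimizing cross-entropy against the soft target. The practical difference from the sampling baseline is that every annotator answer contributes to every update, instead of one randomly chosen answer per step.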

Experimental Results and Implications

The proposed models are evaluated through extensive experiments on the VQA-1.0 and VQA-2.0 datasets. The MFH model achieved superior performance on both, outperforming existing methods that do not use high-order pooling. Initializing the question encoder with pre-trained GloVe word embeddings improves performance further, indicating the value of pre-trained word representations for understanding the question text within the VQA framework.

The authors also analyze normalization of the fused features (power normalization followed by L2 normalization), showing that it is critical for stabilizing training and improving model robustness. Attention-map visualizations show which question words and image regions the model focuses on, which makes the model more interpretable and reveals where it makes correct or erroneous predictions.

Future Prospects

By moving from bilinear to generalized high-order pooling, this paper offers a fusion approach that is not specific to VQA and could inform the design of other multimodal deep learning networks. The methods could potentially be adapted to other applications that integrate visual and textual data, such as image captioning and automated multimedia analysis.

The paper also points toward future research on further improving model efficiency and interpretability. Such work might extend these approaches to additional modalities or further reduce their computational complexity to meet real-time processing constraints.

In summary, this work advances VQA by addressing the challenges of multimodal feature fusion and answer learning with new pooling, attention, and loss designs, providing a solid foundation for future research in this growing field.