DeepPath: A Reinforcement Learning Method for Knowledge Graph Reasoning

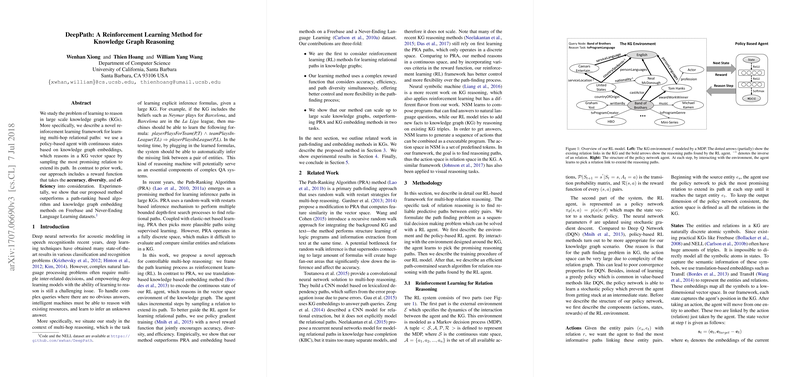

The paper "DeepPath: A Reinforcement Learning Method for Knowledge Graph Reasoning" presents an approach to reasoning over large-scale knowledge graphs (KGs). The authors introduce a reinforcement learning (RL) framework in which a policy-based agent with continuous states navigates the graph and learns multi-hop relational paths. The approach departs from path-ranking algorithms such as PRA by carrying out reasoning in a continuous vector space and by using a reward function that accounts for accuracy, diversity, and efficiency.

Key Contributions

The paper makes three main contributions:

- Reinforcement Learning Framework for Path Discovery:

  - The authors propose a reinforcement learning-based methodology for discovering relational paths. This method encodes entities and relations using knowledge graph embeddings and employs a policy network to explore potential reasoning paths.

- Complex Reward Function:

  - A distinctive reward function underpins the learning process. It simultaneously encourages three aspects:

    - Accuracy: Ensures that paths lead to correct inferences.

    - Diversity: Promotes exploration of varied paths, reducing redundancy.

    - Efficiency: Prefers concise paths, optimizing reasoning efficiency.

- Empirical Superiority Over Existing Methods:

  - Experimental results show the proposed RL method surpasses the performance of PRA and embedding methods on diverse tasks within Freebase and NELL datasets.
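The three reward aspects listed above can be illustrated with a minimal Python sketch. It assumes one plausible formulation: a +1/-1 signal for reaching the target, an inverse-length term for efficiency, and a negative mean cosine similarity to previously found paths for diversity. The function names, the path-vector representation, and the equal default weights are hypothetical choices made for illustration, not values taken from the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def accuracy_reward(reached_target):
    """+1 if the episode ended at the target entity, -1 otherwise."""
    return 1.0 if reached_target else -1.0


def efficiency_reward(path_length):
    """Shorter paths earn more: the reward is the inverse of the path length."""
    if path_length < 1:
        raise ValueError("path_length must be at least 1")
    return 1.0 / path_length


def diversity_reward(path_vec, found_path_vecs):
    """Penalize similarity to paths that were already found."""
    if not found_path_vecs:
        return 0.0
    sims = [cosine(path_vec, other) for other in found_path_vecs]
    return -sum(sims) / len(sims)


def total_reward(reached_target, path_length, path_vec, found_path_vecs,
                 weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three terms; the weights are placeholder assumptions."""
    w_acc, w_eff, w_div = weights
    return (w_acc * accuracy_reward(reached_target)
            + w_eff * efficiency_reward(path_length)
            + w_div * diversity_reward(path_vec, found_path_vecs))
```

In this sketch a path vector could be, for example, the sum of the embeddings of the relations along the path, so that two paths using similar relations receive a stronger diversity penalty.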

Methodology

The core of the proposed technique resides in framing the path-finding challenge as a Markov Decision Process (MDP). The RL agent, equipped with a neural policy network, operates in the KG environment by selecting relations incrementally to form reasoning paths. The network is optimized using policy gradients, thereby learning to prefer paths that maximize the designed reward metrics.
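To make the MDP framing concrete, the following is a small Python sketch on a toy graph. Everything in it is an illustrative assumption: the toy entities and relations, the 2-dimensional embeddings, the state layout (current entity embedding concatenated with the offset to the target), the linear softmax policy standing in for a neural network, and the rule that an invalid action leaves the agent in place.

```python
import math
import random

# Toy knowledge graph: head entity -> relation -> list of tail entities.
KG = {
    "alice": {"worksFor": ["acme"]},
    "acme": {"locatedIn": ["berlin"]},
    "berlin": {},
}
RELATIONS = ["worksFor", "locatedIn"]  # the action space

# Hypothetical 2-d entity embeddings; a real system would load pretrained ones.
EMB = {"alice": [0.1, 0.9], "acme": [0.5, 0.5], "berlin": [0.9, 0.1]}


def make_state(current, target):
    """State = current embedding concatenated with (target - current)."""
    cur, tgt = EMB[current], EMB[target]
    return cur + [t - c for c, t in zip(cur, tgt)]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def policy(state, weights):
    """Linear softmax policy: one weight row per relation."""
    scores = [sum(w * x for w, x in zip(row, state)) for row in weights]
    return softmax(scores)


def step(current, relation, rng):
    """Follow `relation` from `current`; stay in place if the action is invalid."""
    tails = KG[current].get(relation, [])
    if not tails:
        return current, False
    return rng.choice(tails), True


def rollout(start, target, weights, max_steps=5, seed=0):
    """Sample one episode; return the relation path and whether the target was reached."""
    rng = random.Random(seed)
    current, path = start, []
    for _ in range(max_steps):
        probs = policy(make_state(current, target), weights)
        relation = rng.choices(RELATIONS, weights=probs, k=1)[0]
        current, valid = step(current, relation, rng)
        if valid:
            path.append(relation)
        if current == target:
            break
    return path, current == target
```

Calling `rollout("alice", "berlin", [[0.0] * 4, [0.0] * 4])` samples relations uniformly, since all-zero weights give equal scores; training would shift these weights so that the successful relation sequence becomes more likely.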

The training process includes:

- Supervised Initialization: To cope with the very large action space, training begins with a supervised learning phase. Paths found by breadth-first search (BFS) serve as demonstrations from which the policy network learns an initial path-finding behavior.

- Reward-based Retraining: Following the initial supervised phase, the network undergoes fine-tuning with the defined reward function, allowing it to refine its path selection strategy.
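The two training phases above can be sketched in Python as follows. This is a simplified illustration under stated assumptions: it uses a plain BFS over a toy triple list to produce a demonstration path, and a REINFORCE-style update for a linear softmax policy in place of a neural network. The triples, function names, and learning rate are hypothetical. In the supervised phase each demonstrated action can be given a reward of +1; in the retraining phase the same update would be driven by the reward of a sampled episode.

```python
import math
from collections import deque

# Toy triples (head, relation, tail), assumed for illustration only.
TRIPLES = [
    ("alice", "worksFor", "acme"),
    ("acme", "locatedIn", "berlin"),
    ("alice", "friendOf", "bob"),
]


def bfs_relation_path(triples, start, target):
    """Return the shortest list of relations from start to target, or None."""
    outgoing = {}
    for head, relation, tail in triples:
        outgoing.setdefault(head, []).append((relation, tail))
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == target:
            return path
        for relation, tail in outgoing.get(node, []):
            if tail not in seen:
                seen.add(tail)
                queue.append((tail, path + [relation]))
    return None


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(weights, state, action, reward, lr=0.1):
    """One REINFORCE-style update for a linear softmax policy.

    weights[k] is the weight row for action k and is updated in place.
    Returns the action probabilities computed before the update.
    """
    scores = [sum(w * x for w, x in zip(row, state)) for row in weights]
    probs = softmax(scores)
    for k, row in enumerate(weights):
        # Gradient of log pi(action | state) with respect to row k.
        coeff = (1.0 if k == action else 0.0) - probs[k]
        for i, x in enumerate(state):
            row[i] += lr * reward * coeff * x
    return probs
```

For example, `bfs_relation_path(TRIPLES, "alice", "berlin")` yields a two-relation demonstration, and applying `policy_gradient_step` with `reward=1.0` at each step of that path raises the probability the policy assigns to the demonstrated relations.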

Experimental Evaluation

The paper evaluates the RL framework on two tasks, link prediction and fact prediction, using subsets of Freebase and NELL (FB15K-237 and NELL-995). The results indicate:

- Link Prediction: The proposed method improved mean average precision (MAP) scores, often surpassing both PRA and state-of-the-art embedding techniques.

- Fact Prediction: The RL framework demonstrated superior performance, highlighting its strength in recognizing valid reasoning paths.

Moreover, the method found a smaller yet still effective set of reasoning paths than PRA, which points to a more selective and efficient path search.
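For readers unfamiliar with the MAP metric mentioned in the link prediction results, here is a minimal Python sketch of how it is commonly computed. The input format (one list of booleans per query, ordered by descending model score) and the function names are assumptions made for illustration.

```python
def average_precision(ranked_labels):
    """Average precision for one query.

    ranked_labels: booleans ordered by descending model score,
    True where the ranked candidate is a correct answer.
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_correct in enumerate(ranked_labels, start=1):
        if is_correct:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(queries):
    """Mean of the per-query average precision values."""
    if not queries:
        raise ValueError("at least one query is required")
    return sum(average_precision(q) for q in queries) / len(queries)
```

A ranking that places correct answers near the top scores close to 1, while correct answers buried low in the ranking pull the score toward 0.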

Implications and Future Directions

This work provides a compelling alternative for multi-hop reasoning in KGs, paving the way for further exploration in knowledge-based AI systems. The incorporation of diverse and efficiency-driven path-finding strategies broadens the utility of KGs in complex question-answering systems.

Future research could delve into adversarial training mechanisms to refine reward functions and potentially integrate textual data to enrich KG reasoning. A focus on scenarios where KG path information is sparse could extend the applicability of this approach.

In conclusion, the paper presents a technical advancement in KG reasoning, using reinforcement learning to build a flexible, efficient, and empirically validated approach to multi-hop reasoning tasks.