An Essay on "Data Decisions and Theoretical Implications when Adversarially Learning Fair Representations"

The paper "Data Decisions and Theoretical Implications when Adversarially Learning Fair Representations" investigates how to train machine learning (ML) models that treat groups fairly when the sensitive attributes of the data are not readily available. Written by Alex Beutel and colleagues, the work belongs to the broader literature on fairness, accountability, and transparency in machine learning, with a particular focus on biases introduced by imbalanced datasets.

Methodological Approach and Key Experiments

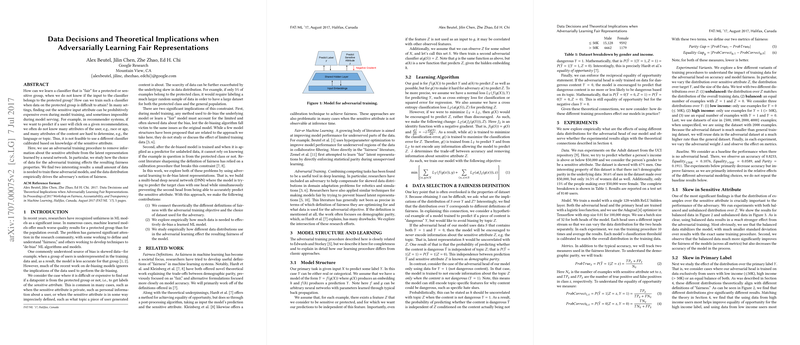

The authors address fairness by using adversarial training to remove sensitive-attribute information from the latent representations of a neural network. The model is a two-headed deep neural network: one head predicts the target class, while an adversarial head tries to predict the sensitive attribute from the same shared hidden layers. Training adjusts the shared layers so that the target remains predictable while the sensitive attribute cannot be recovered from the latent representation.
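The two-headed setup can be sketched in pure Python. This is a minimal illustration under stated assumptions, not the authors' implementation: a single tanh hidden layer, logistic output heads, per-example SGD, and a gradient-reversal step in which the shared layer receives the adversary's gradient negated and scaled by a weight `lam`. All function names (`init_params`, `forward`, `gradients`, `sgd_step`) are hypothetical. Examples whose sensitive attribute is unknown are passed with `z=None` and contribute only to the target objective.

```python
import math
import random

# Hypothetical sketch: layer sizes, activations, and plain SGD are assumptions,
# not the paper's exact architecture or training setup.

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def init_params(n_in, n_hidden, seed=0):
    rng = random.Random(seed)
    return {
        "W": [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)],
        "b": [0.0] * n_hidden,
        "u": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],  # target head weights
        "c": 0.0,                                                 # target head bias
        "v": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],  # adversary head weights
        "e": 0.0,                                                 # adversary head bias
    }

def forward(p, x):
    # Shared latent representation h, then one logistic output per head.
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + bj)
         for row, bj in zip(p["W"], p["b"])]
    p_y = sigmoid(sum(a * hj for a, hj in zip(p["u"], h)) + p["c"])
    p_z = sigmoid(sum(a * hj for a, hj in zip(p["v"], h)) + p["e"])
    return h, p_y, p_z

def gradients(p, x, y, z, lam):
    """Per-example gradients; z is None when the sensitive attribute is unknown."""
    h, p_y, p_z = forward(p, x)
    gy = p_y - y  # dL_y/d(target logit) for sigmoid + binary cross-entropy
    g = {"u": [gy * hj for hj in h], "c": gy, "v": [0.0] * len(h), "e": 0.0}
    dh = [gy * uj for uj in p["u"]]  # dL_y/dh
    if z is not None:
        gz = p_z - z  # dL_z/d(adversary logit)
        # The adversary head itself is trained normally to minimize L_z.
        g["v"] = [gz * hj for hj in h]
        g["e"] = gz
        # Gradient reversal: the shared layer receives -lam * dL_z/dh, pushing h
        # toward carrying less information about z.
        dh = [d - lam * gz * vj for d, vj in zip(dh, p["v"])]
    dpre = [d * (1.0 - hj * hj) for d, hj in zip(dh, h)]  # backprop through tanh
    g["W"] = [[dj * xi for xi in x] for dj in dpre]
    g["b"] = dpre
    return g

def sgd_step(p, x, y, z, lam=1.0, lr=0.1):
    g = gradients(p, x, y, z, lam)
    for j, row in enumerate(p["W"]):
        for k in range(len(row)):
            row[k] -= lr * g["W"][j][k]
        p["b"][j] -= lr * g["b"][j]
        p["u"][j] -= lr * g["u"][j]
        p["v"][j] -= lr * g["v"][j]
    p["c"] -= lr * g["c"]
    p["e"] -= lr * g["e"]

# Usage on two toy examples: one with a known sensitive attribute, one without.
params = init_params(n_in=3, n_hidden=4)
sgd_step(params, x=[1.0, 0.0, 0.5], y=1, z=0, lam=1.0)
sgd_step(params, x=[0.2, 1.0, 0.0], y=0, z=None)
```

Setting `lam=0` leaves the shared layers unaffected by the adversary, so the reversal weight is the single knob that decides how strongly the representation is pushed away from encoding the sensitive attribute.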

The key experiments vary the data distribution fed to the adversarial head during training. The researchers explored scenarios based on (1) balancing the sensitive attribute, (2) skewing the data according to the target class (e.g., high or low income), and (3) changing the size of the adversarial training set. These variations let the authors test empirically how the choice of adversarial training data relates to the resulting fairness metrics.
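The three data decisions can be made concrete with a small selection helper. This is a hypothetical sketch, not the authors' code: each row is assumed to be a dict with a binary target `y` and a sensitive attribute `z` (or `None` when unknown), and the illustrative function `adversary_subset` chooses which rows the adversarial head trains on. It can balance across values of `z` or sample in proportion to the population, optionally restrict to one target class, and uses `size` to control how many rows the adversary sees.

```python
import random
from collections import defaultdict

def adversary_subset(rows, size, balanced=True, target=None, seed=0):
    """Select training rows for the adversary (hypothetical helper).

    Only rows with a known sensitive attribute are usable. If `target` is set,
    keep only rows with that label (skewing by target class). If `balanced`,
    draw an equal number of rows per sensitive group; otherwise sample
    uniformly, which preserves the population's group imbalance.
    """
    rng = random.Random(seed)
    eligible = [r for r in rows
                if r["z"] is not None and (target is None or r["y"] == target)]
    if not balanced:
        return rng.sample(eligible, min(size, len(eligible)))
    groups = defaultdict(list)
    for r in eligible:
        groups[r["z"]].append(r)
    if not groups:
        return []
    per_group = size // len(groups)
    subset = []
    for z in sorted(groups):
        members = groups[z]
        subset.extend(rng.sample(members, min(per_group, len(members))))
    rng.shuffle(subset)
    return subset

# Hypothetical toy population: z = 1 is a ~20% minority, z is known for ~half the rows.
rng = random.Random(42)
data = []
for _ in range(1000):
    z = 1 if rng.random() < 0.2 else 0
    data.append({"y": int(rng.random() < 0.3), "z": z if rng.random() < 0.5 else None})

balanced_small = adversary_subset(data, size=40)              # up to 20 rows per group
high_only = adversary_subset(data, size=40, target=1)         # only y = 1 rows
unbalanced = adversary_subset(data, size=40, balanced=False)  # mirrors population skew
```

Keeping the selection separate from the model makes it easy to rerun the same training loop with each of these adversarial datasets and compare the resulting fairness metrics.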

Theoretical Contributions and Definitions

The paper's main theoretical contribution is to link adversarial training to established fairness definitions, such as demographic parity (equal positive-prediction rates across groups) and equality of opportunity (equal true-positive rates across groups). The authors argue that the data distribution chosen for adversarial training implicitly determines which notion of fairness the resulting model moves toward. For instance, a dataset balanced with respect to the sensitive attribute improves demographic parity by pushing the model toward independence from that attribute.
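The two fairness definitions can be measured as gaps computed from binary predictions. The helper names below are hypothetical and assume binary predictions, binary labels, and a binary sensitive attribute; a gap of 0 means the criterion holds exactly on the given data.

```python
def rate(preds):
    # Fraction of positive predictions; NaN for an empty group.
    return sum(preds) / len(preds) if preds else float("nan")

def demographic_parity_gap(y_pred, z):
    """|P(y_hat=1 | z=0) - P(y_hat=1 | z=1)|."""
    g0 = [p for p, s in zip(y_pred, z) if s == 0]
    g1 = [p for p, s in zip(y_pred, z) if s == 1]
    return abs(rate(g0) - rate(g1))

def equality_of_opportunity_gap(y_pred, y_true, z):
    """|P(y_hat=1 | y=1, z=0) - P(y_hat=1 | y=1, z=1)|, the true-positive-rate gap."""
    g0 = [p for p, t, s in zip(y_pred, y_true, z) if t == 1 and s == 0]
    g1 = [p for p, t, s in zip(y_pred, y_true, z) if t == 1 and s == 1]
    return abs(rate(g0) - rate(g1))

# Toy data: four examples per sensitive group.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
z      = [0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]
print(demographic_parity_gap(y_pred, z))               # 0.25
print(equality_of_opportunity_gap(y_pred, y_true, z))  # 0.5
```

In this toy example the positive-prediction rates differ by 0.25 between groups while the true-positive rates differ by 0.5, so the two criteria can disagree about how unfair the same predictions are.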

The paper builds on existing theoretical frameworks for fairness and extends them to the adversarial learning setting. Because the adversary acts on the model's internal representations, sensitive-attribute labels are needed only for the subset of training data used by the adversary; at serving time, predictions come from the target head alone and require no sensitive-attribute labels.

Practical Implications and Future Directions

This work has practical implications for domains such as recommender systems and automated decision-making, where sensitive attributes such as race or gender may not always be available. By showing that even a small adversarial training set can improve fairness outcomes, the authors offer practical guidance for practitioners who have sensitive-attribute labels for only part of their data.

Removing sensitive information from the latent representation may align or conflict with predictive performance, depending on how heavily the adversarial objective is weighted. The paper explores this trade-off, which is controlled by the parameter λ that scales the adversarial objective; tuning λ is therefore a promising direction for further research and application.
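The role of λ can be written as an update rule. Assuming a gradient-reversal formulation, with shared-layer parameters θ, target-head parameters φ_y, adversary parameters φ_z, target and adversary losses L_y and L_z, and learning rate η (symbols introduced here for illustration, not taken from the paper), one gradient step is:

```latex
\begin{aligned}
\theta &\leftarrow \theta - \eta\left(\frac{\partial \mathcal{L}_y}{\partial \theta} - \lambda\,\frac{\partial \mathcal{L}_z}{\partial \theta}\right) \\
\phi_y &\leftarrow \phi_y - \eta\,\frac{\partial \mathcal{L}_y}{\partial \phi_y} \\
\phi_z &\leftarrow \phi_z - \eta\,\frac{\partial \mathcal{L}_z}{\partial \phi_z}
\end{aligned}
```

With λ = 0 the shared layers ignore the adversary and training reduces to ordinary supervised learning; larger values of λ put more weight on making the sensitive attribute unrecoverable from the representation, which is where the trade-off with accuracy appears.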

Future work can build on this paper to refine the balance between fairness and accuracy. Investigating similar adversarial training frameworks under other fairness definitions, or extending the setup to multiple adversarial heads for multiple sensitive attributes, are promising paths for exploration.

Conclusion

In conclusion, "Data Decisions and Theoretical Implications when Adversarially Learning Fair Representations" contributes to research on fair ML by examining in depth how data decisions affect fairness in adversarially trained models. Through both theoretical and empirical analysis, the paper underscores the importance of dataset composition in adversarial training and lays the groundwork for further work on building equitable ML systems.