Emergence of Language with Multi-agent Games

This essay gives an overview of Havrylov and Titov's research on the emergence of language in multi-agent systems, focusing on how agents can learn to communicate using sequences of discrete symbols. The paper sits at the intersection of multi-agent learning and emergent communication protocols.

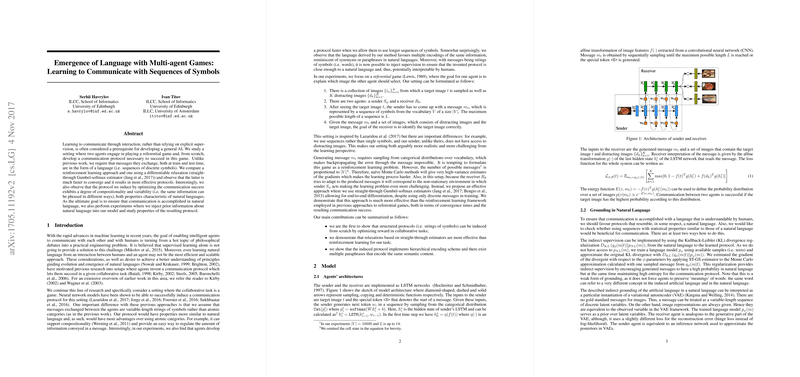

The paper's central premise is that agents can develop a communication protocol through interaction in a structured environment, without anyone specifying what the messages should mean. The researchers frame learning as a cooperative referential game: a sender agent observes a target image and produces a message, and a receiver agent must use that message to pick the target out of a set of candidates that includes distractors. Messages are sequences of discrete symbols rather than single symbols, which mirrors a basic property of natural language.
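To make the structure of such a cooperative game concrete, here is a minimal sketch of one round, assuming a sender/receiver setup in which the receiver must pick a target out of several candidates. Everything in it is illustrative and not taken from the paper: the objects are toy attribute tuples instead of images, and `sender`, `receiver`, `play_round`, `VOCAB`, and `N_ATTRIBUTES` are hypothetical names. The two policies are hand-written stand-ins for what the agents would have to learn.

```python
import itertools
import random

VOCAB = ["a", "b", "c", "d"]   # hypothetical symbol inventory
N_ATTRIBUTES = 3               # each toy object is a tuple of 3 attribute values

# Every possible toy object; attribute values are indices into VOCAB.
ALL_OBJECTS = list(itertools.product(range(len(VOCAB)), repeat=N_ATTRIBUTES))


def sender(target):
    """Hand-written stand-in for a learned sender: one symbol per attribute."""
    return [VOCAB[value] for value in target]


def receiver(message, candidates):
    """Hand-written stand-in for a learned receiver.

    Decodes the message back into attribute values and returns the index of
    the candidate that matches the most attributes.
    """
    decoded = tuple(VOCAB.index(symbol) for symbol in message)
    scores = [sum(d == c for d, c in zip(decoded, cand)) for cand in candidates]
    return scores.index(max(scores))


def play_round(rng, n_candidates=4):
    """Play one round and return the shared reward (1.0 on success, else 0.0)."""
    candidates = rng.sample(ALL_OBJECTS, n_candidates)  # distinct objects
    target_idx = rng.randrange(n_candidates)
    message = sender(candidates[target_idx])            # sender sees only the target
    guess = receiver(message, candidates)               # receiver sees message + candidates
    return 1.0 if guess == target_idx else 0.0


if __name__ == "__main__":
    rng = random.Random(0)
    rewards = [play_round(rng) for _ in range(100)]
    print("success rate:", sum(rewards) / len(rewards))
```

Because these stand-in policies already share a perfect code, every round succeeds. The point of the research is that learned agents start without such a code and must converge on one using only the shared reward.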

A notable aspect of the methodology is how the agents are trained. Both agents are recurrent neural networks designed for sequence processing, so they can generate and interpret symbolic messages. Because sampling discrete symbols is not differentiable, the paper compares a reinforcement learning approach with a differentiable relaxation based on the straight-through Gumbel-softmax estimator, and reports that the relaxation converges much faster and yields more effective protocols. The experiments show that agents do develop communication strategies that solve the game scenarios set by the researchers.
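The reinforcement learning side of this training can be sketched with a REINFORCE-style update on a sequence-generating policy. This is a toy illustration under stated assumptions, not the paper's implementation: a lookup table of logits keyed by (input, message prefix) stands in for a recurrent network, and `TabularSender`, `VOCAB_SIZE`, `MAX_LEN`, the learning rate, and the baseline are all hypothetical choices.

```python
import math
import random
from collections import defaultdict

VOCAB_SIZE = 3   # hypothetical number of symbols
MAX_LEN = 2      # hypothetical fixed message length


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


class TabularSender:
    """Toy stand-in for a recurrent sender: one logit vector per (input, prefix)."""

    def __init__(self):
        self.logits = defaultdict(lambda: [0.0] * VOCAB_SIZE)

    def sample(self, obj, rng):
        """Generate a message symbol by symbol, conditioning on the prefix so far."""
        message = []
        for _ in range(MAX_LEN):
            probs = softmax(self.logits[(obj, tuple(message))])
            message.append(rng.choices(range(VOCAB_SIZE), weights=probs)[0])
        return tuple(message)

    def log_prob(self, obj, message):
        """log p(message | obj) = sum over positions of log p(symbol | obj, prefix)."""
        total = 0.0
        for t, sym in enumerate(message):
            probs = softmax(self.logits[(obj, message[:t])])
            total += math.log(probs[sym])
        return total

    def reinforce_update(self, obj, message, reward, baseline=0.0, lr=0.5):
        """Gradient ascent on (reward - baseline) * log p(message | obj).

        For a softmax, d log p(sym) / d logit_k = 1[k == sym] - p_k.
        """
        advantage = reward - baseline
        for t, sym in enumerate(message):
            key = (obj, message[:t])
            probs = softmax(self.logits[key])
            for k in range(VOCAB_SIZE):
                grad = (1.0 if k == sym else 0.0) - probs[k]
                self.logits[key][k] += lr * advantage * grad


if __name__ == "__main__":
    rng = random.Random(0)
    sender = TabularSender()
    msg = sender.sample(obj=0, rng=rng)
    before = sender.log_prob(0, msg)
    sender.reinforce_update(0, msg, reward=1.0)   # pretend the receiver guessed right
    print(before < sender.log_prob(0, msg))
```

A message that led to a reward above the baseline becomes more likely the next time the same input is seen, and one below the baseline becomes less likely. The reward signal arrives only after the whole message has been sent, which is one reason this kind of estimator can be slow to converge on discrete sequences.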

The reported results support this. Agents converge on a shared communication protocol across multiple trials, which speaks to the robustness of the learning framework. The paper's quantitative evaluations measure how reliably the agents communicate, and the authors also observe that the induced protocol shows a degree of compositionality and variability (the same information can be phrased in different ways), both of which are characteristic of natural languages.

The implications of this research are both practical and theoretical. Practically, it presents a model for developing communication protocols in multi-agent systems, which could be applicable in areas such as robotics and distributed AI systems. Theoretically, it contributes to the understanding of language emergence, offering a computational perspective on how communication systems might originate and evolve that could inform linguistics and cognitive science.

Looking forward, this line of research could develop in several directions. One is to increase the complexity of the environments and tasks to further test the limits of emergent communication. Another is to ground the agents in richer perceptual input, beyond the image features already used in the game, to simulate more realistic scenarios where multi-modal communication is necessary.

In conclusion, Havrylov and Titov's work on learning to communicate within multi-agent games stands as a noteworthy contribution to the field of emergent communication in AI. It lays foundational principles for further exploration and offers a robust framework for understanding how artificial systems can develop their own languages.