Dense-Captioning Events in Videos: A Comprehensive Analysis

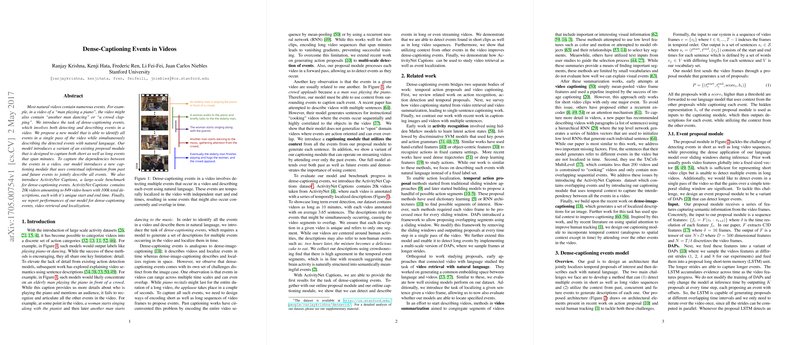

The paper "Dense-Captioning Events in Videos" by Krishna et al. introduces dense-captioning of events, a video-understanding task that combines temporal event detection with natural-language description. A model must find every event in a video, including events that overlap in time, and describe each one in a sentence. The task moves video analysis beyond assigning a single class label toward richer semantic annotation.

Key Contributions

- Dense-Captioning Model: The proposed model operates in two core stages:

  - Event Proposal Module: An extension of the Deep Action Proposals (DAPs) framework, this module detects events of widely varying duration in a single forward pass through the video. Because it reads video features sampled at multiple temporal strides, it captures both short and long events efficiently.

  - Captioning Module with Context: Rather than describing each event in isolation, this module conditions each sentence on the other events detected in the video, drawing on both past and future events to produce more informative descriptions.

- ActivityNet Captions Dataset: The authors introduce a large-scale dataset built specifically for dense-captioning, consisting of 20,000 videos annotated with about 100,000 captions. Each video carries multiple descriptions, each localized to a temporal segment, and these segments often overlap, which makes the dataset a demanding benchmark for the task.
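To make the "temporally localized, often overlapping" annotation structure concrete, here is a minimal sketch that finds overlapping caption pairs in one video's annotation. The field names `duration`, `timestamps`, and `sentences` are assumed to match the released annotation files and should be checked against the actual download; the video id `v_example`, the helper `overlapping_pairs`, and the sentences are hypothetical toy data.

```python
import json
from itertools import combinations

def overlapping_pairs(video: dict) -> list:
    """Return index pairs of captions whose [start, end] segments overlap (hypothetical helper)."""
    segs = video["timestamps"]  # assumed field: one [start, end] pair per sentence
    return [(i, j) for i, j in combinations(range(len(segs)), 2)
            if min(segs[i][1], segs[j][1]) > max(segs[i][0], segs[j][0])]

# Toy annotation in the assumed layout; not taken from the real dataset.
example = json.loads("""{"v_example": {"duration": 60.0,
  "timestamps": [[0.0, 25.0], [20.0, 45.0], [50.0, 60.0]],
  "sentences": ["A man walks onto a stage.", "He starts playing a guitar.", "The crowd applauds."]}}""")

if __name__ == "__main__":
    video = example["v_example"]
    for i, j in overlapping_pairs(video):
        print(video["sentences"][i], "|", video["sentences"][j])
```

In this toy video only the first two captions share time (20 to 25 seconds), which is the kind of overlap a dense-captioning model has to handle.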

Methodology

Event Proposal Module

The event proposal module is designed to detect events across different temporal scales. It samples the video's frame features at several strides (e.g., 1, 2, 4, 8) and passes each strided sequence through an LSTM, which at every time step outputs a set of event proposals with confidence scores. Unlike sliding-window approaches, which must process many overlapping windows separately, this design covers the video in a single pass, improving efficiency and scalability to long videos.
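The multi-stride idea can be illustrated by subsampling a per-frame feature sequence at several strides and enumerating the candidate segments that could be scored at each step. This is a minimal sketch under stated assumptions, not the authors' implementation: the helper names `strided_views` and `candidate_proposals` are hypothetical, candidates are assumed to end at the current step (as with DAPs-style anchors), anchor lengths are assumed to be counted in strided steps, and the LSTM that would score each candidate is omitted.

```python
from typing import Dict, List, Sequence, Tuple

def strided_views(features: Sequence, strides: Sequence[int] = (1, 2, 4, 8)) -> Dict[int, list]:
    """Subsample a per-frame feature sequence at each stride (hypothetical helper)."""
    return {s: list(features[::s]) for s in strides}

def candidate_proposals(num_frames: int, stride: int,
                        anchor_lengths: Sequence[int]) -> List[Tuple[int, int]]:
    """Enumerate (start_frame, end_frame) candidates, both inclusive, in original-frame units.

    Assumption: at strided step k the sequence sits at frame k * stride, and an
    anchor of length L (in strided steps) proposes an event that ends at that
    frame and reaches back L - 1 steps, clipped at frame 0.
    """
    proposals = []
    num_steps = len(range(0, num_frames, stride))  # same length as features[::stride]
    for k in range(num_steps):
        end = k * stride
        for length in anchor_lengths:
            start = max(0, (k - length + 1) * stride)
            proposals.append((start, end))
    return proposals

if __name__ == "__main__":
    views = strided_views(list(range(64)))
    print({s: len(v) for s, v in views.items()})  # steps an LSTM would run per stride
    for stride in (1, 8):
        cands = candidate_proposals(64, stride, anchor_lengths=(1, 2, 4))
        print(stride, len(cands), max(e - s for s, e in cands))
```

With the same anchors, the widest candidate grows in proportion to the stride: for 64 frames and anchors of 1, 2, and 4 steps, the widest span is 3 frames at stride 1 but 24 frames at stride 8, while the stride-8 pass runs only 8 steps.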

Captioning Module with Context

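Because events in a video are often related, the captioning module describes each event in the context of the others. Relative to a reference event, it splits the remaining detected events into a past bucket and a future bucket; events that overlap the reference event go into the past bucket if they end earlier and into the future bucket otherwise. Within each bucket, an attention mechanism weighs neighboring events by their relevance to the reference event, producing a past context vector and a future context vector. These are combined with the reference event's own representation to condition the LSTM that generates its caption, yielding more coherent and contextually accurate descriptions.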
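A minimal sketch of this past/future context pooling follows. It uses the end-time bucketing rule described above, but the weighting is a simplifying assumption: each neighbor is scored by a plain dot product with the reference event's vector and the scores are softmax-normalized within a bucket, whereas the paper uses learned attention parameters whose exact form may differ. The helper names and toy vectors are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]

def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))

def attend(query: Vector, neighbors: List[Vector]) -> Vector:
    """Softmax-weighted average of neighbor vectors, scored by dot product with the query.

    Assumption: plain dot-product scores; the paper's learned attention weights are omitted.
    """
    if not neighbors:
        return [0.0] * len(query)  # empty bucket -> zero context vector
    scores = [dot(query, h) for h in neighbors]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * h[d] for w, h in zip(weights, neighbors)) for d in range(len(query))]

def context_features(hidden: List[Vector], end_times: List[float], i: int) -> Vector:
    """Concatenate [past context, own vector, future context] for event i.

    Other events ending before event i go to the past bucket; the rest go to the future bucket.
    """
    past = [h for j, h in enumerate(hidden) if j != i and end_times[j] < end_times[i]]
    future = [h for j, h in enumerate(hidden) if j != i and end_times[j] >= end_times[i]]
    return attend(hidden[i], past) + list(hidden[i]) + attend(hidden[i], future)

if __name__ == "__main__":
    hidden = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy event representations
    end_times = [2.0, 5.0, 9.0]                      # toy end times in seconds
    print(context_features(hidden, end_times, 1))    # -> [1.0, 0.0, 0.0, 1.0, 1.0, 1.0]
```

The concatenated vector is what a caption decoder would consume. An empty bucket contributes a zero vector, so the first and last events still produce a fixed-size input.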

Evaluation

Dense-Captioning Results

Dense-captioning performance is evaluated with standard captioning metrics (BLEU, METEOR, and CIDEr), computed on predicted events that overlap ground-truth events above a temporal intersection over union (tIoU) threshold, so that both description quality and localization accuracy are measured. The experiments show that adding context from both past and future events improves captioning performance: the model describes events better when it can draw on temporal context, supporting the hypothesis that events within a video are highly interrelated.
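The tIoU between two segments is the length of their overlap divided by the length of their union. The sketch below computes it and lists, for a given threshold, which predicted events would be paired with ground-truth events for caption scoring. The function names are hypothetical, and the thresholds in the example are illustrative; the exact set used in the paper's protocol should be checked against the paper.

```python
from typing import List, Sequence, Tuple

Segment = Tuple[float, float]  # (start, end), e.g. in seconds

def tiou(a: Segment, b: Segment) -> float:
    """Temporal intersection over union: overlap length / union length."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0

def matched_pairs(preds: Sequence[Segment], gts: Sequence[Segment],
                  threshold: float) -> List[Tuple[int, int]]:
    """(prediction index, ground-truth index) pairs whose tIoU meets the threshold."""
    return [(i, j) for i, p in enumerate(preds) for j, g in enumerate(gts)
            if tiou(p, g) >= threshold]

if __name__ == "__main__":
    preds = [(0.0, 10.0), (20.0, 30.0)]  # toy predicted events
    gts = [(5.0, 15.0), (21.0, 30.0)]    # toy ground-truth events
    for t in (0.3, 0.5, 0.7, 0.9):       # illustrative thresholds
        print(t, matched_pairs(preds, gts, t))
```

Here the first prediction overlaps its ground-truth event with a tIoU of 1/3, so it is matched only at the loosest threshold, while the second (tIoU 0.9) is matched at every threshold up to 0.9. Captions would then be scored only for matched pairs.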

Event Localization

The paper also examines how well the event proposal module localizes events. Recall is measured against the number of proposals and at several tIoU thresholds, and the results show that multi-scale (multi-stride) sampling improves recall, particularly for long-duration events. Sampling at multiple strides gives broader temporal coverage, so the module can accommodate events of very different lengths.
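Proposal recall at N is commonly computed as the fraction of ground-truth events matched, at or above a tIoU threshold, by at least one of the N highest-scoring proposals. The sketch below implements that definition; the function names and toy data are hypothetical, and the paper's evaluation code may differ in details such as tie-breaking.

```python
from typing import Sequence, Tuple

Segment = Tuple[float, float]  # (start, end)

def tiou(a: Segment, b: Segment) -> float:
    """Temporal intersection over union of two segments."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0

def recall_at_n(proposals: Sequence[Segment], scores: Sequence[float],
                gts: Sequence[Segment], n: int, threshold: float) -> float:
    """Fraction of ground-truth events hit by at least one of the top-n proposals."""
    ranked = sorted(zip(scores, proposals), key=lambda pair: pair[0], reverse=True)
    top = [seg for _, seg in ranked[:n]]
    hits = sum(1 for g in gts if any(tiou(p, g) >= threshold for p in top))
    return hits / len(gts) if gts else 0.0

if __name__ == "__main__":
    proposals = [(0.0, 10.0), (0.0, 30.0), (18.0, 30.0)]  # toy proposals
    scores = [0.9, 0.5, 0.8]                              # toy confidence scores
    gts = [(0.0, 9.0), (20.0, 30.0)]                      # toy ground truth
    for n in (1, 2, 3):
        print(n, recall_at_n(proposals, scores, gts, n, threshold=0.5))
```

Sweeping `n` and `threshold` this way traces the recall curves used to compare proposal methods, such as single-stride versus multi-stride sampling.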

Retrieval Tasks

The authors also evaluate the model on two retrieval tasks, demonstrating its versatility: video retrieval, in which the model retrieves the correct video given a paragraph made up of its event descriptions, and paragraph retrieval, in which it retrieves the correct paragraph given a video. Including contextual information improves retrieval performance, suggesting that context helps the model capture the semantics of complex videos.
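Retrieval results of this kind are typically reported as recall at K (the fraction of queries whose correct match appears among the top K results) and the median rank of the correct match. The sketch below computes both from a query-by-candidate similarity matrix in which the correct candidate for query i is candidate i; the matrix, the function name `retrieval_metrics`, and the K values are assumptions for illustration, not taken from the paper.

```python
from statistics import median
from typing import Dict, List, Sequence

def retrieval_metrics(sim: List[List[float]], ks: Sequence[int] = (1, 5)) -> Dict[str, float]:
    """Recall@K and median rank, assuming the correct candidate for query i is column i."""
    ranks = []
    for i, row in enumerate(sim):
        # Rank = 1 + number of candidates scored strictly higher than the correct one.
        ranks.append(1 + sum(1 for s in row if s > row[i]))
    metrics = {f"R@{k}": sum(r <= k for r in ranks) / len(ranks) for k in ks}
    metrics["median_rank"] = float(median(ranks))
    return metrics

if __name__ == "__main__":
    # Toy similarities: rows are paragraph queries, columns are candidate videos.
    sim = [[0.9, 0.1, 0.3],
           [0.2, 0.4, 0.8],
           [0.5, 0.6, 0.7]]
    print(retrieval_metrics(sim, ks=(1, 2)))
```

The same function covers both directions: transposing the matrix turns video retrieval (paragraph queries) into paragraph retrieval (video queries).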

Implications and Future Directions

Practical Implications

This work has significant practical implications for several domains. In content recommendation and video summarization, the ability to generate detailed descriptions of multiple events can enhance user experience by providing richer metadata. In surveillance and security, dense-captioning can facilitate the detection and understanding of complex activities, improving situational awareness.

Theoretical Implications

From a theoretical perspective, the paper advances the field by emphasizing the role of context in video understanding. Future research could explore more sophisticated methods for context incorporation. Another intriguing direction is the extension of this approach to real-time video analysis, where the current online model could be further optimized.

Future Developments

Future work could integrate dense-captioning models with additional input modalities such as audio and text, paving the way for multimodal video understanding. Transformer-based architectures may also model long-range dependencies better, potentially surpassing the current LSTM-based methods.

Conclusion

Overall, "Dense-Captioning Events in Videos" by Krishna et al. makes significant strides in video understanding by combining event detection with natural language descriptions. The introduction of contextual information marks a substantial contribution, underscoring the interconnected nature of events within videos. The ActivityNet Captions dataset provides a valuable benchmark for future research, fostering advancements in the dense-captioning task.