Exploring the Versatility of Model-Agnostic Meta-Learning (MAML) in Fast Adaptation

Introduction

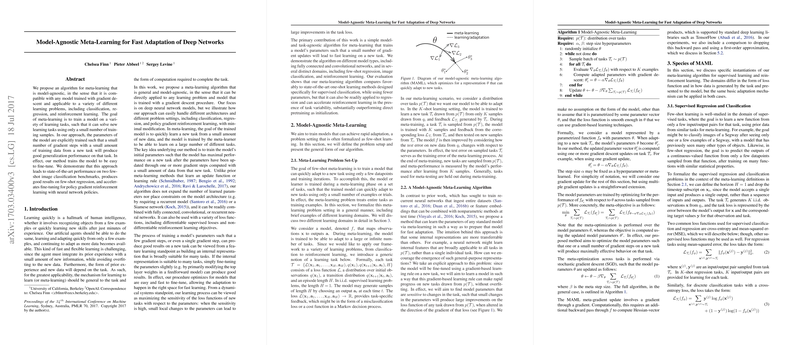

Model-Agnostic Meta-Learning (MAML) is a meta-learning algorithm for fast adaptation of deep networks across a diverse range of learning tasks. Proposed by Chelsea Finn, Pieter Abbeel, and Sergey Levine, it provides a general framework for adapting to new tasks from only a small amount of data. The essence of MAML is to train a model's parameters so that a few gradient updates on a new task produce a large improvement in performance on that task.

Core Concepts

Meta-Learning Mechanics

At the heart of MAML is the meta-learning idea of learning to learn: rather than being trained to solve one task, the model is trained so that it can be adapted quickly to new ones. Its parameters are optimized explicitly so that a small number of gradient steps on a small amount of data from a new task lead to good generalization on that task. This enables rapid learning across tasks ranging from classification and regression to reinforcement learning.
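The idea above can be written compactly. In the notation commonly used for MAML (these symbols are introduced here for illustration and do not appear in the text above), let $\theta$ be the shared initialization, $\mathcal{T}_i$ a task drawn from a task distribution $p(\mathcal{T})$, $\mathcal{L}_{\mathcal{T}_i}$ its loss, $f_\theta$ the model, and $\alpha$, $\beta$ the inner and outer step sizes. Assuming a single inner gradient step, the two levels of optimization look roughly like this:

$$
\theta_i' = \theta - \alpha \nabla_\theta \mathcal{L}_{\mathcal{T}_i}(f_\theta)
$$

$$
\theta \leftarrow \theta - \beta \nabla_\theta \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}(f_{\theta_i'})
$$

The first line adapts the parameters to one task. The second updates the initialization using the loss measured after adaptation, so the outer gradient has to pass through the inner step.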

Model and Task Agnosticism

A prominent feature of MAML is that it is agnostic to both the model and the task. It is compatible with any model that can be trained by gradient descent, and it applies to different learning problems without requiring changes to the underlying architecture, which simplifies adaptation across diverse learning tasks.
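To make the "any model trainable through gradient descent" point concrete, here is a minimal sketch of an adaptation step that knows nothing about the model except how to obtain its gradients. The names `adapt`, `line_grad`, and `scalar_grad`, and both toy models, are hypothetical and chosen only for illustration.

```python
from typing import Callable, List

Params = List[float]
GradFn = Callable[[Params], Params]


def adapt(params: Params, grad_fn: GradFn, alpha: float, steps: int = 1) -> Params:
    """Run `steps` plain gradient-descent updates.

    Nothing here depends on the model; it only needs a function that
    returns the loss gradient for the current parameters.
    """
    for _ in range(steps):
        grads = grad_fn(params)
        params = [p - alpha * g for p, g in zip(params, grads)]
    return params


# Hypothetical model A: a line y = w*x + b fit to the single point
# (x=1, y=3) with squared error.
def line_grad(params: Params) -> Params:
    w, b = params
    err = (w * 1.0 + b) - 3.0
    return [2.0 * err * 1.0, 2.0 * err]


# Hypothetical model B: a single scalar p with loss (p - 5)^2.
def scalar_grad(params: Params) -> Params:
    return [2.0 * (params[0] - 5.0)]


# The same adaptation routine serves both models unchanged.
line_params = adapt([0.0, 0.0], line_grad, alpha=0.25)
scalar_params = adapt([0.0], scalar_grad, alpha=0.5)
```

The routine is shared across both toy models because the only interface it relies on is "parameters in, gradients out", which is the sense in which the method is model-agnostic.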

Algorithmic Structure

MAML first trains a model on a variety of learning tasks. During this meta-learning phase, the algorithm explicitly optimizes the model's parameters so that a small number of gradient steps on data from a new task substantially improve performance on that task. Unlike previous meta-learning approaches, it neither expands the number of learned parameters nor imposes constraints on the model architecture, which makes it widely applicable.
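The structure described above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the authors' implementation: the model is a scalar linear model `w * x`, the loss is mean squared error, there is one inner gradient step, and tasks are lines through the origin with slopes drawn uniformly from [1, 3]. All function names, the task distribution, and the hyperparameters are hypothetical. Because the model is a single scalar, the second-derivative term of the meta-gradient is written out by hand; a practical implementation would normally rely on automatic differentiation.

```python
import random


def mse(w, xs, ys):
    """Mean squared error of the scalar model f_w(x) = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(w, xs, ys):
    """Derivative of mse with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def mse_hess(xs):
    """Second derivative of mse with respect to w (constant in w here)."""
    return sum(2.0 * x * x for x in xs) / len(xs)


def inner_adapt(w, xs, ys, alpha):
    """One inner-loop gradient step on a task's support data."""
    return w - alpha * mse_grad(w, xs, ys)


def maml_meta_grad(w, support, query, alpha):
    """Derivative of the post-adaptation query loss with respect to the
    initialization w, obtained by the chain rule through the inner step."""
    xs_s, ys_s = support
    xs_q, ys_q = query
    w_adapted = inner_adapt(w, xs_s, ys_s, alpha)
    dadapted_dw = 1.0 - alpha * mse_hess(xs_s)  # second-order term
    return mse_grad(w_adapted, xs_q, ys_q) * dadapted_dw


def sample_task(rng, k=5):
    """Hypothetical task: y = slope * x, returned as (support, query) sets."""
    slope = rng.uniform(1.0, 3.0)

    def draw():
        xs = [rng.uniform(-1.0, 1.0) for _ in range(k)]
        return xs, [slope * x for x in xs]

    return draw(), draw()


def meta_train(steps=2000, tasks_per_batch=4, alpha=0.1, beta=0.05, seed=0):
    """Outer loop: average meta-gradients over a batch of tasks and
    take a gradient step on the shared initialization."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(steps):
        total = 0.0
        for _ in range(tasks_per_batch):
            support, query = sample_task(rng)
            total += maml_meta_grad(w, support, query, alpha)
        w -= beta * total / tasks_per_batch
    return w
```

Calling `meta_train()` returns the learned initialization. In this toy setting it should settle somewhere near the middle of the sampled slope range, from which one call to `inner_adapt` on a new task's support set moves the weight toward that task's slope.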

Evaluation and Implications

Experiments Across Domains

The authors evaluated MAML in experiments across several domains. It achieved state-of-the-art results at the time of publication on few-shot image classification benchmarks, performed well on few-shot regression tasks, and accelerated fine-tuning in policy gradient reinforcement learning. These results point to the algorithm's robustness and its potential as a general tool for rapid learning across varied applications.

Practical and Theoretical Contributions

The versatility and efficiency of MAML have clear practical implications, particularly in fields that require rapid adaptation to new information from limited data. Theoretically, it offers a different perspective on meta-learning: instead of learning an update rule or a separate learner, it emphasizes the role of the internal representation and the parameter initialization in fast learning. This view opens avenues for future research on optimizing those aspects to increase learning speed further.

Future Directions

Given the success of MAML, future work might extend it to unsupervised and semi-supervised learning scenarios. The algorithm itself could be refined by developing more sophisticated meta-optimization techniques or by exploring alternatives to gradient descent for even faster adaptation. Additionally, tailoring the method to the challenges of specific application domains could improve its applicability and effectiveness.

Conclusion

MAML represents a milestone in meta-learning, offering a powerful, model-agnostic algorithm capable of fast adaptation across a broad spectrum of learning tasks. Its simplicity, combined with broad applicability and demonstrated efficacy, makes it a valuable contribution to the field of artificial intelligence. Continued exploration and optimization of MAML could significantly advance the development of agile, adaptable machine learning systems.