Arbitrary-Oriented Scene Text Detection via Rotation Proposals

The paper by Ma et al. proposes a framework for detecting arbitrarily oriented text in natural scene images. It targets a limitation of earlier text detectors, which mostly assume horizontally aligned text regions. The key contribution is the Rotation Region Proposal Network (RRPN), which generates text proposals that carry orientation information in addition to location and size.

Key Contributions

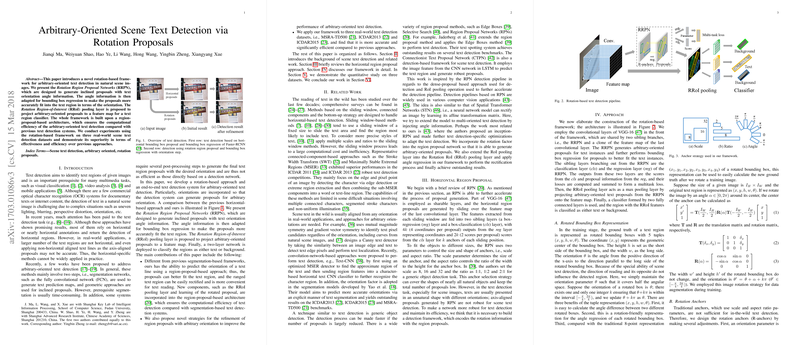

- Rotation Region Proposal Network (RRPN): The RRPN generates inclined proposals, each described by a center, a width, a height, and a rotation angle. Because the angle is part of every proposal, the proposals can align with text regions much more closely than axis-aligned boxes, whatever the orientation of the text.

- Rotation Region-of-Interest (RRoI) Pooling Layer: This layer projects the arbitrary-oriented proposals onto the convolutional feature map and pools each one into a fixed-size feature for text/non-text classification. The pooling grid follows the orientation of the proposal rather than an axis-aligned box. As a result, the pooled features cover the text region with less surrounding background than standard RoI pooling would include.

- Comprehensive Evaluation: The framework was tested on three benchmark datasets: MSRA-TD500, ICDAR2013, and ICDAR2015. Together these cover multi-oriented text lines, mostly horizontal focused text, and incidental scene text, so they show how well the method handles varying levels of complexity and orientation.
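To make the idea of inclined proposals concrete, the sketch below shows how rotated anchors and angle regression could look. RRPN builds its proposals from anchors that carry an angle as well as a scale and an aspect ratio. Several details here are assumptions to check against the paper:

- `RotatedBox`, `make_rotated_anchors`, `decode`, and `corners` are hypothetical names.
- The scales, width-to-height ratios, and six angles are illustrative values.
- The offsets are normalized by the anchor's width and height, as in Faster R-CNN.
- The angle is wrapped into [-π/4, 3π/4) by adding multiples of π, since a rectangle is unchanged by a half-turn.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class RotatedBox:
    """Inclined box: center (cx, cy), width w, height h, angle theta in radians."""
    cx: float
    cy: float
    w: float
    h: float
    theta: float


# Assumed, illustrative anchor settings (not guaranteed to match the paper).
SCALES = (8, 16, 32)                  # multiplied by the base size below
RATIOS = (2.0, 5.0, 8.0)              # width / height: text lines are long and thin
ANGLES = tuple(math.radians(a) for a in (-30, 0, 30, 60, 90, 120))


def wrap_angle(theta: float) -> float:
    """Map theta into [-pi/4, 3*pi/4) by adding a multiple of pi."""
    return (theta + math.pi / 4) % math.pi - math.pi / 4


def make_rotated_anchors(cx: float, cy: float, base: float = 16.0) -> List[RotatedBox]:
    """All scale x ratio x angle anchors centred at one location."""
    anchors = []
    for scale in SCALES:
        area = (base * scale) ** 2
        for ratio in RATIOS:
            h = math.sqrt(area / ratio)   # chosen so that w * h == area
            w = h * ratio
            for theta in ANGLES:
                anchors.append(RotatedBox(cx, cy, w, h, theta))
    return anchors


def decode(anchor: RotatedBox, deltas: Tuple[float, float, float, float, float]) -> RotatedBox:
    """Apply assumed regression offsets (tx, ty, tw, th, t_theta) to an anchor."""
    tx, ty, tw, th, tt = deltas
    return RotatedBox(
        cx=anchor.cx + tx * anchor.w,
        cy=anchor.cy + ty * anchor.h,
        w=anchor.w * math.exp(tw),
        h=anchor.h * math.exp(th),
        theta=wrap_angle(anchor.theta + tt),
    )


def corners(box: RotatedBox) -> List[Tuple[float, float]]:
    """Four corner points of the inclined box, e.g. for drawing it."""
    c, s = math.cos(box.theta), math.sin(box.theta)
    signs = ((-1, -1), (1, -1), (1, 1), (-1, 1))
    return [
        (box.cx + dx * box.w / 2 * c - dy * box.h / 2 * s,
         box.cy + dx * box.w / 2 * s + dy * box.h / 2 * c)
        for dx, dy in signs
    ]


anchors = make_rotated_anchors(100.0, 50.0)
print(len(anchors))  # 3 scales x 3 ratios x 6 angles = 54
proposal = decode(anchors[0], (0.1, 0.0, 0.2, 0.0, 0.5))
print(proposal, corners(proposal))
```

Adding the angle as a fifth regression target is what lets a proposal rotate toward the text. The other four targets are the same as in axis-aligned region proposal networks.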
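RRoI pooling can be pictured as laying a fixed grid over each inclined proposal, in the proposal's own frame, and max-pooling each grid cell. The pure-Python sketch below is a simplified variant under these assumptions, not the paper's implementation:

- The function name `rroi_max_pool` and its parameters are hypothetical.
- Each bin is sampled at a fixed number of points.
- Each sample reads the feature-map cell that contains it.

```python
import math
from typing import List, Sequence


def rroi_max_pool(
    fmap: Sequence[Sequence[float]],
    cx: float, cy: float, w: float, h: float, theta: float,
    out_h: int = 2, out_w: int = 4,
    samples: int = 4, spatial_scale: float = 1.0,
) -> List[List[float]]:
    """Max-pool an inclined region of a 2-D feature map into an out_h x out_w grid.

    The box (cx, cy, w, h, theta) is in image coordinates; spatial_scale maps it
    onto the feature map (e.g. 1/16 for a stride-16 backbone).
    """
    rows, cols = len(fmap), len(fmap[0])
    cx, cy, w, h = (val * spatial_scale for val in (cx, cy, w, h))
    c, s = math.cos(theta), math.sin(theta)
    pooled = []
    for i in range(out_h):                    # bins along the box height
        row = []
        for j in range(out_w):                # bins along the box width (reading direction)
            best = -math.inf
            for a in range(samples):
                for b in range(samples):
                    # Sample point in the box's local frame, relative to its center.
                    u = -w / 2 + (j + (b + 0.5) / samples) * w / out_w
                    v = -h / 2 + (i + (a + 0.5) / samples) * h / out_h
                    # Rotate the sample into feature-map coordinates.
                    x = cx + u * c - v * s
                    y = cy + u * s + v * c
                    xi, yi = math.floor(x), math.floor(y)  # cell containing the sample
                    if 0 <= xi < cols and 0 <= yi < rows:
                        best = max(best, fmap[yi][xi])
            row.append(best if best > -math.inf else 0.0)  # empty bins pool to 0
        pooled.append(row)
    return pooled


# A 4 x 8 map whose value is the column index, covered by a horizontal 8 x 4 box.
horizontal = [[float(x) for x in range(8)] for _ in range(4)]
print(rroi_max_pool(horizontal, cx=4, cy=2, w=8, h=4, theta=0.0))
# The same content stored vertically (value = row index), read with theta = pi/2.
vertical = [[float(y)] * 4 for y in range(8)]
print(rroi_max_pool(vertical, cx=2, cy=4, w=8, h=4, theta=math.pi / 2))
# Both calls print [[1.0, 3.0, 5.0, 7.0], [1.0, 3.0, 5.0, 7.0]]
```

The second call shows why the grid follows the proposal's angle. With θ = π/2, vertical text yields the same pooled layout as horizontal text, so the classifier sees features arranged along the reading direction. Rotated variants of RoIAlign interpolate bilinearly rather than reading the nearest cell.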

Numerical Results and Claims

The RRPN-based framework achieves competitive accuracy while remaining efficient. On MSRA-TD500 it reaches a precision of 82%, a recall of 69%, and an F-measure of 75%. The best prior F-measure on this dataset was 76%, so here RRPN is on par with the state of the art rather than clearly ahead of it. The system runs in about 0.3 seconds per image.

On ICDAR2015, RRPN yields a precision of 84%, a recall of 77%, and an F-measure of 80%, outperforming the contemporary approaches it was compared against. On ICDAR2013, it achieves a precision of 95%, a recall of 88%, and an F-measure of 91%. This shows that the rotation-based design does not sacrifice accuracy on predominantly horizontal text.
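The reported figures can be cross-checked against the F-measure, which is the harmonic mean of precision and recall, F = 2PR / (P + R). The short sketch below recomputes F from the rounded precision and recall values quoted above. `f_measure` is a hypothetical helper name.

```python
def f_measure(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Rounded precision / recall values as reported in the text.
reported = {
    "MSRA-TD500": (0.82, 0.69),
    "ICDAR2015": (0.84, 0.77),
    "ICDAR2013": (0.95, 0.88),
}
for name, (p, r) in reported.items():
    print(f"{name}: F = {f_measure(p, r):.3f}")
# MSRA-TD500: F = 0.749
# ICDAR2015: F = 0.803
# ICDAR2013: F = 0.914
```

All three recomputed values round to the stated F-measures of 75%, 80%, and 91%. The reported numbers are therefore internally consistent.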

Practical and Theoretical Implications

Practically, this work is relevant to multimedia, video analysis, and mobile applications in which text can appear in any orientation. The runtime of roughly 0.3 seconds per image (about three images per second) is fast enough for many interactive uses. Latency-critical settings such as autonomous driving or augmented reality would likely need further speed-ups. Document analysis is another potential beneficiary.

Theoretically, treating orientation as an explicit output of the detector points to a broader direction for object detection. Geometric attributes such as rotation can be encoded directly in the proposals and in the pooling step, rather than relying on axis-aligned boxes. Future work could combine this approach with stronger proposal architectures such as Inception-RPN, which may improve accuracy further.

Future Directions

Future work may refine the rotation proposals to cope with extreme orientations and distortions. An inclined rectangle cannot tightly enclose curved text, so extending the framework to curved or otherwise irregular text shapes would widen its applicability. Training on larger and more diverse datasets could further improve the robustness of the detector. Integrating it with recognition models could also yield an end-to-end pipeline that both detects and reads text.

Conclusion

The paper shows that incorporating rotation information into scene text detection addresses a significant gap in detectors built around axis-aligned boxes. Together, RRPN and RRoI pooling improve on prior results on ICDAR2015 and match the state of the art on MSRA-TD500, at a moderate computational cost. This makes the approach suitable for a wide range of applications and a strong reference point for research on arbitrary-oriented text detection.