Multi-agent Reinforcement Learning in Sequential Social Dilemmas

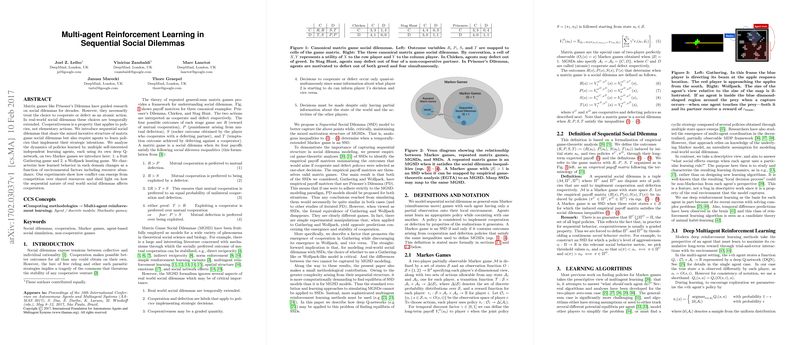

The paper "Multi-agent Reinforcement Learning in Sequential Social Dilemmas" addresses a notable gap in modeling social dilemmas where choices are temporally extended rather than atomic. The authors introduce the concept of Sequential Social Dilemmas (SSDs) to capture complex dynamics in scenarios more representative of real-world dilemmas, as opposed to traditional Matrix Game Social Dilemmas (MGSDs).

Core Contributions

The authors analyze the adaptation of multi-agent reinforcement learning (MARL) to SSDs through a series of experiments using deep Q-networks (DQNs) in two Markov games: the Gathering game and the Wolfpack game. These games illustrate situations where cooperation and defection emerge from agents learning policies over time. Unlike MGSDs, SSDs extend beyond static decision-making to encompass continuous interaction dynamics.

Game Analysis and Results

- Gathering Game: Agents collect apples while avoiding or engaging with others using tagging. The dynamics reflect competition over limited resources, where tagging acts as a proxy for defection. Results indicate agents exhibit more aggressive, defecting behavior in resource-scarce environments.

- Wolfpack Game: Agents (wolves) collaborate to catch a prey. Unlike Gathering, cooperation here directly enhances rewards through group captures. The paper finds empirical payoff matrices aligning with well-known MGSD types, including Prisoner’s Dilemma, underlining the complexity of SSDs.

- Environmental and Agent Parameters: The authors investigate how factors like temporal discount rates, batch size, and network capacity influence the emergence of defection. These factors mimic social psychology considerations, shedding light on the organic development of cooperation or defection in learned policies.

Implications and Future Work

The findings highlight that SSD frameworks can better emulate complex, sequential decision-making scenarios, capturing important nuances not visible in MGSD models. The emergence of differing levels of cooperation and defection as functions of environmental dynamics has direct implications for modeling real-world social interactions and informs potential policy-making in social systems management. Moreover, acknowledging the computational complexities involved, the adaptation of sophisticated MARL techniques to SSDs paves the way for nuanced exploration of cooperative trends in AI systems.

In pursuing further research, the robustness of these metrics across varied environments and agent architectures warrants attention. Opportunities exist to extend this approach to diverse fields, including economics and social policy, where the crafting of cooperative frameworks is critical.

Overall, this research challenges the static assumptions inherent in traditional models, advocating for a more dynamic approach to understanding and influencing agent interactions in complex social landscapes.