Abstractive Text Summarization using Sequence-to-sequence RNNs and Beyond

The paper "Abstractive Text Summarization using Sequence-to-sequence RNNs and Beyond" by Nallapati et al. explores the application of Attentional Encoder-Decoder Recurrent Neural Networks (RNNs) to the task of abstractive text summarization. Abstractive summarization aims to generate a concise summary that captures the core ideas of a text by paraphrasing them, unlike extractive summarization, which selects and reproduces text segments directly from the source. This paper highlights critical challenges in abstractive summarization and proposes several novel models to address them, achieving state-of-the-art performance on multiple datasets.

Key Contributions

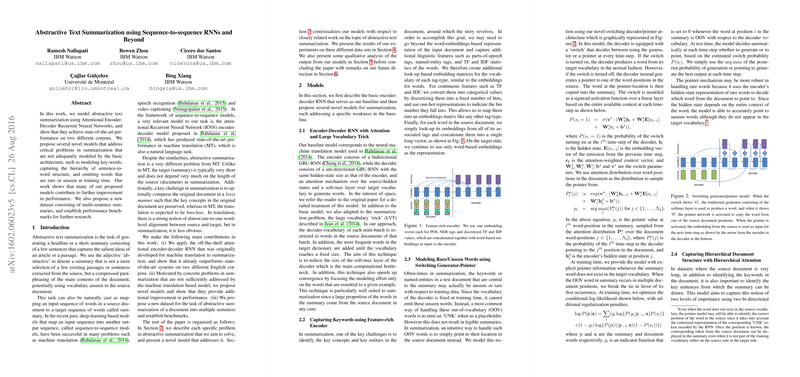

- Baseline Attentional Encoder-Decoder RNN: The authors adapt the attentional sequence-to-sequence model, originally developed for machine translation, to abstractive summarization. This baseline model alone already surpasses the previous state of the art on two English corpora.

- Feature-rich Encoder: Because identifying key concepts and entities is central to summarization, this model augments the encoder's word embeddings with additional linguistic features: parts-of-speech (POS) tags, named-entity tags, and term frequency (TF) and inverse document frequency (IDF) statistics. This richer representation helps the encoder capture the document's main ideas.

- Switching Generator-Pointer Model: Standard encoder-decoder models struggle with rare or unseen words because they can only emit words from a fixed target vocabulary. The switching generator-pointer model adds a switch that lets the decoder, at each time step, either generate a word from the target vocabulary or point to a word position in the source document and copy that word. This removes the fixed-vocabulary limitation and lets the model reproduce out-of-vocabulary words, such as rare named entities, in the summary.

- Hierarchical Attention Model: Summarizing long documents requires recognizing both important sentences and important keywords. This model uses two bidirectional RNNs on the source side, one at the word level and one at the sentence level, with attention operating at both levels. The word-level attention weights are re-weighted by the corresponding sentence-level attention and renormalized, so that words in important sentences receive more attention.

- Multi-sentence Summarization Dataset: The paper also presents a new document summarization dataset, built by adapting an existing question-answering corpus. It features longer documents and multi-sentence summaries, which pose additional challenges such as maintaining thematic coherence across sentences.
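
The attention step at the heart of the baseline model can be sketched as follows: at each decoder step, every encoder hidden state is scored against the current decoder state, the scores are normalized with a softmax, and the weighted sum of encoder states becomes the context vector. This is a minimal plain-Python sketch; the dot-product scoring function and the toy vectors are assumptions chosen for brevity, not the paper's exact parameterization.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(decoder_state: List[float],
           encoder_states: List[List[float]]) -> Tuple[List[float], List[float]]:
    """Return (attention weights, context vector) for one decoder step.

    Scores use a plain dot product (an assumption made for brevity).
    """
    scores = [sum(d * h for d, h in zip(decoder_state, h_j)) for h_j in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    # Context vector: attention-weighted sum of encoder hidden states.
    context = [sum(w * h_j[k] for w, h_j in zip(weights, encoder_states))
               for k in range(dim)]
    return weights, context


# Toy example: three hypothetical encoder states of dimension 2.
enc = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attend([2.0, 0.0], enc)
print([round(w, 3) for w in weights], [round(c, 3) for c in context])
```

The first and third encoder states align equally well with the decoder state, so they receive equal (and larger) weights, and the context vector is pulled toward them.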
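
The feature-rich encoder input described above can be sketched as a per-token concatenation. In this sketch the word, POS, and NER vectors come from small random lookup tables standing in for learned embeddings, and the continuous TF and IDF values are discretized into buckets and one-hot encoded. The tokens, tags, IDF values, vector sizes, and the choice of four buckets are all hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List, Sequence

random.seed(0)
N_BINS = 4  # hypothetical number of TF/IDF buckets


def make_table(keys: Sequence[str], dim: int) -> Dict[str, List[float]]:
    """Random vectors standing in for learned embedding tables (hypothetical)."""
    return {k: [random.uniform(-0.1, 0.1) for _ in range(dim)] for k in keys}


def bin_index(value: float, max_value: float, n_bins: int = N_BINS) -> int:
    """Map a non-negative value in [0, max_value] to a bucket index in [0, n_bins)."""
    if max_value <= 0:
        return 0
    return min(int(value / max_value * n_bins), n_bins - 1)


def one_hot(index: int, size: int = N_BINS) -> List[float]:
    """One-hot vector with a 1.0 at `index`."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


# Toy document with hypothetical tags and corpus IDF values.
tokens = ["smith", "was", "arrested"]
pos_tags = ["NNP", "VBD", "VBN"]
ner_tags = ["PER", "O", "O"]
idf = {"smith": 5.0, "was": 0.5, "arrested": 3.0}

word_emb = make_table(tokens, dim=3)
pos_emb = make_table(sorted(set(pos_tags)), dim=2)
ner_emb = make_table(sorted(set(ner_tags)), dim=2)

tf = Counter(tokens)  # term frequency within this document
max_tf, max_idf = max(tf.values()), max(idf.values())


def token_features(i: int) -> List[float]:
    """Concatenate word, POS and NER vectors with one-hot TF and IDF bins."""
    w = tokens[i]
    return (word_emb[w] + pos_emb[pos_tags[i]] + ner_emb[ner_tags[i]]
            + one_hot(bin_index(tf[w], max_tf))
            + one_hot(bin_index(idf[w], max_idf)))


features = [token_features(i) for i in range(len(tokens))]
print(len(features), len(features[0]))  # prints "3 15" (3 + 2 + 2 + 4 + 4 dims)
```

Bucketing keeps every feature categorical, so each feature can be handled the same way as the word itself; the concatenated vector is what the encoder RNN would consume at each position.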
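
A single decoding step of the switching generator-pointer can be sketched as below. The sketch assumes a hard decision at inference time: the switch probability is a sigmoid of a score (in the model it depends on the decoder state, the previous output, and the context; here it is passed in as a precomputed logit), and a 0.5 threshold decides between generating and pointing. The threshold, the function name `switch_step`, and the toy inputs are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def switch_step(switch_logit: float,
                vocab_probs: Dict[str, float],
                attention: List[float],
                source_tokens: List[str]) -> Tuple[str, str]:
    """One decoding step of a hard generator/pointer switch (sketch).

    Returns (action, word): either generate the most probable vocabulary
    word, or copy the source word at the most-attended position.
    """
    if sigmoid(switch_logit) >= 0.5:  # hypothetical decision threshold
        return "generate", max(vocab_probs, key=vocab_probs.get)
    position = max(range(len(attention)), key=attention.__getitem__)
    return "point", source_tokens[position]


source = ["mr.", "okonkwo", "visited", "lagos"]
vocab = {"<unk>": 0.4, "visited": 0.35, "city": 0.25}  # "okonkwo" is out of vocabulary
print(switch_step(2.0, vocab, [0.1, 0.2, 0.3, 0.4], source))   # ('generate', '<unk>')
print(switch_step(-2.0, vocab, [0.1, 0.6, 0.2, 0.1], source))  # ('point', 'okonkwo')
```

The first call shows the failure mode the switch is meant to fix: the generator can only produce `<unk>` for the rare name, whereas pointing copies it directly from the source.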
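
The re-weighting step of the hierarchical attention model can be sketched in a few lines: each word's attention weight is multiplied by the attention weight of the sentence that contains it, and the products are renormalized to sum to one. The function name and the toy weights are hypothetical; in the model, both attention distributions come from the word-level and sentence-level RNNs.

```python
from typing import List


def rescale_attention(word_attn: List[float],
                      sent_attn: List[float],
                      sent_of_word: List[int]) -> List[float]:
    """Scale each word's attention by its sentence's attention, then renormalize.

    sent_of_word[j] is the index of the sentence containing word j.
    Assumes softmax-style inputs, so the products are positive and their sum is non-zero.
    """
    raw = [w * sent_attn[sent_of_word[j]] for j, w in enumerate(word_attn)]
    total = sum(raw)
    return [r / total for r in raw]


# Hypothetical toy input: four words in two sentences, uniform word-level attention.
word_attn = [0.25, 0.25, 0.25, 0.25]
sent_attn = [0.8, 0.2]
sent_of_word = [0, 0, 1, 1]
print(rescale_attention(word_attn, sent_attn, sent_of_word))
```

With uniform word-level attention, words in the first sentence end up with four times the weight of words in the second, mirroring the 0.8 to 0.2 sentence-level attention.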

Experimental Evaluation

The research benchmarks the proposed models on three datasets: the Gigaword corpus, the DUC 2003/2004 corpora, and the newly introduced CNN/Daily Mail corpus. Using ROUGE scores (ROUGE-1, ROUGE-2, and ROUGE-L), the authors report substantial improvements over prior methods:

- The baseline attentional encoder-decoder model already outperforms the previous state of the art on the Gigaword and DUC datasets.

- The feature-rich encoder and hierarchical attention models further improve performance by better capturing linguistic information and document structure.

- The switching generator-pointer model improves the handling of rare and unseen words, although its impact on overall scores varies across datasets.
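
To make the metric concrete, ROUGE-N and ROUGE-L F1 can be sketched as clipped n-gram overlap and longest-common-subsequence overlap between a candidate and a reference summary. This is a simplified illustration with whitespace tokenization; the official ROUGE toolkit adds its own tokenization, optional stemming, and other settings, so its scores will differ from this sketch. The example sentences are made up.

```python
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def f1(overlap: float, cand_total: int, ref_total: int) -> float:
    """Harmonic mean of precision (vs. candidate) and recall (vs. reference)."""
    if overlap == 0 or cand_total == 0 or ref_total == 0:
        return 0.0
    precision, recall = overlap / cand_total, overlap / ref_total
    return 2 * precision * recall / (precision + recall)


def rouge_n(candidate: str, reference: str, n: int = 1) -> float:
    cand, ref = ngrams(candidate.split(), n), ngrams(reference.split(), n)
    overlap = sum((cand & ref).values())  # clipped n-gram matches
    return f1(overlap, sum(cand.values()), sum(ref.values()))


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence (dynamic programming)."""
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, 1):
        for j, y in enumerate(b, 1):
            dp[i][j] = dp[i - 1][j - 1] + 1 if x == y else max(dp[i - 1][j], dp[i][j - 1])
    return dp[len(a)][len(b)]


def rouge_l(candidate: str, reference: str) -> float:
    c, r = candidate.split(), reference.split()
    return f1(lcs_length(c, r), len(c), len(r))


ref = "police arrested the suspect on monday"
cand = "the suspect was arrested monday"
print(round(rouge_n(cand, ref, 1), 3), round(rouge_n(cand, ref, 2), 3), round(rouge_l(cand, ref), 3))
# prints "0.727 0.222 0.545"
```

ROUGE-1 rewards shared content words regardless of order, while ROUGE-2 and ROUGE-L also penalize the reordering in the candidate, which is why they score it lower.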

Implications and Future Work

The proposed models help bridge the gap between extractive and abstractive summarization, particularly through the switching generator-pointer mechanism, which lets the decoder copy words from the source text when needed while still generating paraphrases. Because the hierarchical attention model explicitly represents document structure, it is better suited to longer texts, loosely mirroring how a human reader first identifies the important sentences and then the important words within them.

Future work could focus on optimizing these models for multi-sentence summaries, addressing issues such as repetitive content generation within the summaries. Additionally, exploring intra-attention mechanisms to enhance summary diversity and coherence could be beneficial. The introduction of the CNN/Daily Mail dataset encourages further research into complex summarization tasks, fostering the development of models capable of generating coherent, multi-sentence summaries that maintain contextual integrity.

In conclusion, this paper makes significant progress in abstractive text summarization: its models address concrete challenges (identifying key concepts, handling rare and unseen words, and capturing document hierarchy), and its multi-sentence dataset provides a foundation for future research. Together, the attentional RNN architectures, hierarchical attention, and feature-rich encoding show the potential of deep learning approaches to advance the state of the art in text summarization.