"Why Should I Trust You?": Explaining the Predictions of Any Classifier

In the paper "'Why Should I Trust You?': Explaining the Predictions of Any Classifier," Ribeiro, Singh, and Guestrin address the opacity of machine learning models by proposing a novel method for explaining their predictions: Local Interpretable Model-agnostic Explanations (LIME). The core aim is to help users decide whether to trust a model and its individual predictions by making those predictions understandable. Once explained, otherwise opaque models can be scrutinized and improved through human feedback.

Contributions

The paper presents two principal contributions:

- Local Interpretable Model-agnostic Explanations (LIME):

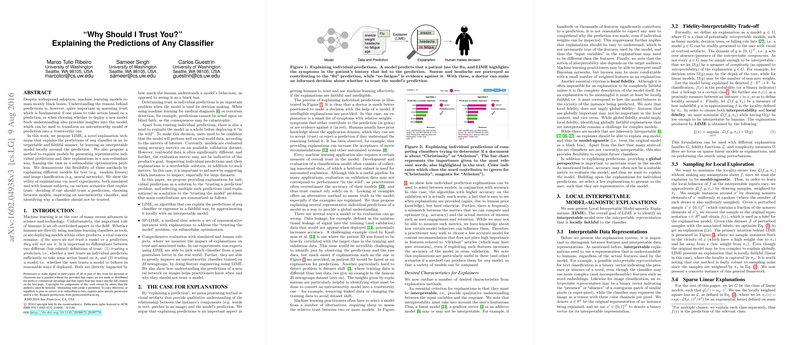

- Objective: To explain individual predictions of any machine learning model through locally interpretable models.

- Method: For a given prediction, LIME approximates the black-box model with an interpretable model in the locality of the prediction. This is achieved by perturbing the input data, obtaining predictions for these perturbed samples, and then fitting an interpretable model (like a sparse linear model) that approximates the black-box model locally.

- Features: LIME maintains both local fidelity (faithfulness to the black-box model in the prediction's neighborhood) and interpretability (simplicity and comprehensibility of the explanation).

- Submodular Pick for Explaining Models (SP-LIME):

- Objective: To select a representative set of instances and their explanations that provide insight into the model's behavior as a whole.

- Method: SP-LIME uses submodular optimization to identify a diverse set of instances whose explanations collectively cover the various behaviors of the model, thereby giving a global perspective without redundancy.
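The LIME procedure and the SP-LIME pick described above can be sketched in dependency-free Python. In the paper's notation, an explanation is xi(x) = argmin over g in G of L(f, g, pi_x) + Omega(g), where L is a square loss weighted by the proximity kernel pi_x(z) = exp(-D(x, z)^2 / sigma^2) and Omega(g) penalizes complexity. This is a minimal sketch under stated assumptions: interpretable features are binary (as with words present in a document), the instance being explained is the all-ones vector, and D is cosine distance. It also swaps the paper's K-LASSO feature selection for a simpler ridge fit that keeps the K largest coefficients and refits on them. The function names (`solve`, `weighted_ridge`, `explain_instance`, `submodular_pick`), parameter defaults, and the toy black box are hypothetical and are not the API of the authors' `lime` package.

```python
import math
import random


def solve(a, b):
    """Solve the square system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def weighted_ridge(rows, targets, weights, alpha=1.0):
    """Intercept + coefficients minimizing sum_i w_i * (y_i - g(z_i))^2 + alpha * ||coef||^2."""
    x = [[1.0] + list(r) for r in rows]  # prepend an intercept column
    p = len(x[0])
    xtwx = [[sum(w * xi[j] * xi[k] for xi, w in zip(x, weights)) for k in range(p)]
            for j in range(p)]
    xtwy = [sum(w * xi[j] * y for xi, y, w in zip(x, targets, weights)) for j in range(p)]
    for j in range(1, p):  # leave the intercept unpenalized
        xtwx[j][j] += alpha
    return solve(xtwx, xtwy)


def explain_instance(predict, d, num_features=2, num_samples=500, sigma=0.25, seed=0):
    """LIME-style sketch (hypothetical helper): explain predict() at the all-ones point."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for s in range(num_samples):
        if s == 0:
            z = [1] * d  # keep the original instance in the sample
        else:
            kept = set(rng.sample(range(d), rng.randint(1, d)))  # keep a random subset
            z = [1 if j in kept else 0 for j in range(d)]
        # Cosine distance between the all-ones instance and binary z is 1 - sqrt(|z| / d).
        dist = 1.0 - math.sqrt(sum(z) / d)
        rows.append(z)
        targets.append(predict(z))  # query the black box on the perturbed sample
        weights.append(math.exp(-dist ** 2 / sigma ** 2))  # proximity kernel pi_x(z)
    coef = weighted_ridge(rows, targets, weights)[1:]
    top = sorted(range(d), key=lambda j: -abs(coef[j]))[:num_features]  # K largest weights
    refit = weighted_ridge([[r[j] for j in top] for r in rows], targets, weights)[1:]
    return dict(zip(top, refit))  # feature index -> local linear weight


def submodular_pick(W, budget):
    """Greedily pick up to `budget` rows of explanation matrix W maximizing weighted coverage."""
    n, d = len(W), len(W[0])
    importance = [math.sqrt(sum(abs(W[i][j]) for i in range(n))) for j in range(d)]

    def coverage(chosen):
        # Sum the importance of every feature used by at least one chosen explanation.
        return sum(importance[j] for j in range(d) if any(abs(W[i][j]) > 0 for i in chosen))

    chosen = []
    for _ in range(min(budget, n)):
        best = max((i for i in range(n) if i not in chosen),
                   key=lambda i: coverage(chosen + [i]))
        chosen.append(best)
    return chosen


if __name__ == "__main__":
    # Toy black box over 5 binary features; it relies mostly on feature 0 (+) and 3 (-).
    def black_box(z):
        return 1.0 / (1.0 + math.exp(-(3 * z[0] - 2 * z[3] + 0.1 * z[1])))

    print(explain_instance(black_box, d=5))
    W = [[1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 0.5]]
    print(submodular_pick(W, budget=2))  # -> [0, 2]
```

In the demo, the explanation assigns a positive weight to feature 0 and a negative weight to feature 3, the two features the toy black box depends on most. The pick returns rows 0 and 2: row 1 is skipped because its only non-zero feature is already covered by row 0, which is the redundancy SP-LIME is designed to avoid.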

Empirical Validation

The paper evaluates LIME and SP-LIME through experiments with both simulated users and human subjects, on text and image classification tasks:

- Simulated User Experiments:

  - LIME outperforms the alternative explanation methods it is compared against (random feature selection, Parzen windows, and greedy feature removal), both at recovering the features that inherently interpretable classifiers actually use and at helping simulated users judge whether individual predictions can be trusted.

  - SP-LIME helps simulated users choose the classifier that generalizes better by surfacing explanations that expose reliance on untrustworthy features.

- Human Subject Experiments:

  - Using LIME explanations, users were able to identify which of two classifiers would generalize better, and explanations selected with SP-LIME made this choice more accurate than randomly selected ones.

  - In feature engineering tasks, non-experts significantly improved classifier performance by removing spurious features identified through LIME, demonstrating the practical utility of these explanations.
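The recall measurement used in the simulated-user experiments can be made concrete. The paper trains classifiers that are interpretable by design (sparse logistic regression and decision trees), so the "gold" features each prediction depends on are known, and reports the fraction of those gold features that an explanation recovers, averaged over test instances. The following is a minimal sketch that assumes explanations and gold sets are given as lists of feature names; the function names and example data are hypothetical.

```python
from statistics import mean


def gold_recall(explained, gold):
    """Fraction of the gold features (those the model truly uses) that the explanation recovers."""
    gold = set(gold)
    return len(gold & set(explained)) / len(gold)


def average_recall(explanations, gold_sets):
    """Average recall over test instances, pairing each explanation with its instance's gold set."""
    return mean(gold_recall(e, g) for e, g in zip(explanations, gold_sets))


if __name__ == "__main__":
    # Hypothetical example: two test instances with word-level features.
    explanations = [["great", "plot", "boring"], ["awful", "cast"]]
    gold_sets = [["great", "boring"], ["awful", "script"]]
    print(average_recall(explanations, gold_sets))  # -> 0.75
```

The first instance recovers both of its gold features (recall 1.0) and the second recovers one of two (recall 0.5), giving an average of 0.75.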

Theoretical and Practical Implications

The introduction of LIME and SP-LIME has several significant implications:

- Trust and Adoption: By making the predictions of any classifier interpretable, these methods can enhance user trust, which is fundamental for the deployment and acceptance of machine learning models in sensitive applications such as healthcare and security.

- Model Improvement: The insights gained from LIME explanations enable users to identify and rectify issues like data leakage and dataset shift, leading to improved model performance and robustness.

- Future Work: Having demonstrated LIME on text and image classification, the authors suggest the framework could be applied to further domains such as speech, video, and medical data. Future work could also explore other families of interpretable models (for example, decision trees) and improve LIME's computational efficiency, for instance through parallelization and GPU processing.

Conclusion

This paper presents a significant advancement in the interpretability of machine learning models, providing tools that can explain predictions of any model in an interpretable manner. The empirical results validate the effectiveness of LIME and SP-LIME, demonstrating their utility in improving trust, facilitating model selection, and aiding in feature engineering. This work paves the way for more transparent and trustworthy machine learning applications, addressing a critical need in the field.