An Essay on "The Ubuntu Dialogue Corpus: A Large Dataset for Research in Unstructured Multi-Turn Dialogue Systems"

The paper by Lowe et al., titled "The Ubuntu Dialogue Corpus: A Large Dataset for Research in Unstructured Multi-Turn Dialogue Systems," introduces a large dataset for developing and benchmarking dialogue systems. The Ubuntu Dialogue Corpus stands out for its size: nearly 1 million multi-turn dialogues, containing over 7 million utterances and about 100 million words. This essay gives an overview of the dataset, its significance, and the benchmarks presented.

Dataset Composition and Motivation

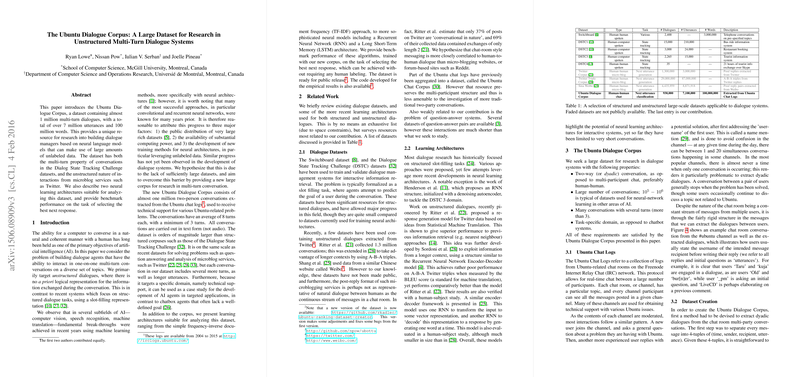

The motivation behind the Ubuntu Dialogue Corpus is a gap in the dialogue systems research landscape. Unlike fields such as computer vision or machine translation, dialogue systems have not seen comparable breakthroughs, which the authors attribute in part to the limited availability of large, rich datasets. Existing dialogue datasets such as those of the Dialogue State Tracking Challenge (DSTC) focus on structured dialogues with predefined slot-filling tasks. Datasets derived from microblogging platforms like Twitter are larger, but their exchanges are typically short and lack the multi-turn complexity of natural human conversation.

The Ubuntu Dialogue Corpus addresses these constraints with a large dataset extracted from the Ubuntu IRC logs, which contain extensive two-person technical support conversations. Conversations average about 8 turns and all fall within a single domain, technical support. This makes the corpus well suited to developing dialogue systems that handle real-world, domain-specific interactions.

Dialogue Extraction and Dataset Characteristics

The dialogues in the corpus are extracted from multi-party chat logs with a heuristic method that isolates conversations between exactly two users, typically one asking for technical help and one providing it. The extraction identifies the intended recipient of a message through name mentions and uses those links to segment the chat stream into coherent two-person exchanges. Utterances that cannot be tied to such an exchange are left out, which is intended to keep the dataset clean and contextually rich.
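The name-mention idea can be sketched in a few lines of Python. This is a simplified illustration, not the authors' extraction procedure: the function names (`find_recipient`, `extract_dyads`), the `(sender, text)` log format, and the rule that only the first token is checked for a nickname are all assumptions made for the example.

```python
def find_recipient(utterance, known_users):
    """Return the addressed user if the first token is a known nickname, else None."""
    tokens = utterance.split(maxsplit=1)
    if not tokens:
        return None
    candidate = tokens[0].rstrip(":,")  # "bob:" and "bob," both address bob
    return candidate if candidate in known_users else None


def extract_dyads(log):
    """Group addressed messages into two-person threads keyed by the user pair."""
    users = {sender for sender, _ in log}
    threads = {}
    for sender, text in log:
        recipient = find_recipient(text, users)
        if recipient is None or recipient == sender:
            continue  # unaddressed messages are simply skipped in this sketch
        key = frozenset((sender, recipient))
        threads.setdefault(key, []).append((sender, text))
    return threads


# Hypothetical chat log in (sender, text) form.
log = [
    ("alice", "how do I install flash?"),
    ("bob", "alice: try the restricted extras package"),
    ("carol", "anyone seen the new kernel?"),
    ("alice", "bob, that worked, thanks"),
]
threads = extract_dyads(log)
```

Here the two addressed messages end up in a single alice/bob thread, while the unaddressed ones are dropped. A fuller implementation would also need to recover the opening question, which in this sketch is lost because it names no recipient.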

The dataset's headline statistics are as follows: it comprises 930,000 dialogues, averaging 10.34 words per utterance and 7.71 turns per conversation. That is far larger than typical structured dialogue datasets, which makes it particularly valuable for training neural architectures that need large amounts of data.

Benchmarking Neural Learning Architectures

The paper also benchmarks several learning architectures on this dataset, ranging from the traditional term frequency-inverse document frequency (TF-IDF) method to neural models such as Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM) networks. To evaluate these methods, the authors propose the task of next utterance selection: given a dialogue context, the model must pick the correct response from a set of candidate responses. Performance is reported as Recall@k, the fraction of test cases in which the correct response appears among the model's k highest-ranked candidates.
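A minimal sketch of how such a Recall@k score can be computed is shown below. The function name `recall_at_k` and the convention that the true response sits at index 0 of each score list are assumptions for this example, as is the tie-breaking rule (ties count in the model's favor).

```python
def recall_at_k(scores_per_example, k):
    """Fraction of examples whose true response ranks in the top k.

    Each entry of scores_per_example is a list of model scores for the
    candidates of one context; index 0 holds the true response (assumed).
    """
    hits = 0
    for scores in scores_per_example:
        true_score = scores[0]
        # Zero-based rank: how many distractors score strictly higher.
        rank = sum(1 for s in scores[1:] if s > true_score)
        if rank < k:
            hits += 1
    return hits / len(scores_per_example)


# Three hypothetical test cases with three candidates each.
examples = [
    [0.9, 0.1, 0.3],  # true response ranked first
    [0.2, 0.8, 0.1],  # true response ranked second
    [0.5, 0.6, 0.7],  # true response ranked third
]
r1 = recall_at_k(examples, 1)
r2 = recall_at_k(examples, 2)
```

With these toy scores, one of three examples is a hit at k = 1 and two of three at k = 2. With 2 candidates per context, chance-level Recall@1 is 50%; with 10 candidates it is 10%.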

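The TF-IDF approach serves as a baseline: the context and each candidate response are represented as TF-IDF vectors, and the candidate with the highest cosine similarity to the context is selected. In contrast, the RNN and LSTM models read the context and the response word by word and encode each as a fixed-size vector; the LSTM adds gating that helps it retain information over longer sequences. Both neural models are trained in a Siamese arrangement, in which the same network encodes the context and the candidate response and the model learns to score matching pairs higher than mismatched ones.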
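The TF-IDF baseline can be sketched with the standard library alone. This is an illustrative version under stated assumptions, not the paper's code: whitespace tokenization, raw term counts, the unsmoothed `log(N / df)` form of IDF, and all function names are choices made for the example.

```python
import math
from collections import Counter


def build_idf(documents):
    """Inverse document frequency, log(N / df), from a list of strings."""
    n = len(documents)
    df = Counter()
    for doc in documents:
        df.update(set(doc.split()))
    return {term: math.log(n / count) for term, count in df.items()}


def tfidf_vector(text, idf):
    """Sparse TF-IDF vector as a dict; unseen terms get weight 0."""
    tf = Counter(text.split())
    return {t: c * idf.get(t, 0.0) for t, c in tf.items()}


def cosine(u, v):
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def select_response(context, candidates, idf):
    """Index of the candidate most similar to the context."""
    cvec = tfidf_vector(context, idf)
    scores = [cosine(cvec, tfidf_vector(c, idf)) for c in candidates]
    return max(range(len(candidates)), key=scores.__getitem__)


# Hypothetical training text used only to estimate IDF weights.
corpus = [
    "my wifi driver is not working",
    "try reinstalling the wifi driver",
    "the weather is nice today",
    "install the package with apt",
]
idf = build_idf(corpus)
context = "my wifi driver is not working"
candidates = ["the weather is nice today", "try reinstalling the wifi driver"]
best = select_response(context, candidates, idf)
```

In this toy case the second candidate is chosen because it shares the informative terms "wifi" and "driver" with the context, whereas the first shares only the common word "is". The same dependence on word overlap is the baseline's main limitation: a correct response that uses different vocabulary from the context scores poorly.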

The empirical results show that the LSTM architecture markedly outperforms both the RNN and the TF-IDF baseline. Specifically, the LSTM achieves a Recall@1 of 87.8% when choosing between 2 candidate responses (1 in 2) and a Recall@1 of 60.4% when choosing among 10 candidates (1 in 10). These results are consistent with the LSTM being better able to capture long-term dependencies and semantic nuances in the dialogues.

Implications and Future Directions

The implications of this research are multifaceted. Practically, the Ubuntu Dialogue Corpus provides a robust foundation for training high-performance dialogue systems that can be deployed in customer service, technical support, and other interactive AI applications. Theoretically, the dataset facilitates further exploration into more sophisticated neural network architectures and their ability to model intricate, multi-turn dialogues. The LSTM's performance particularly indicates the potential of advanced sequence models in capturing dialogue context more effectively than rudimentary methods like TF-IDF.

Future developments in AI can leverage this dataset to experiment with more intricate models, such as Transformer-based architectures or hybrid models combining statistical and neural approaches. Additionally, the corpus can be used to explore new evaluation metrics tailored for dialogue generation tasks, potentially leading to more nuanced and context-aware dialogue systems.

Conclusion

The introduction of the Ubuntu Dialogue Corpus marks a significant step forward in dialogue systems research. By addressing the limitations of existing datasets and providing a varied, extensive corpus, this work paves the way for the development of AI agents capable of engaging in complex, multi-turn discussions within specific domains. The benchmarks provided offer a solid foundation for future advancements in dialogue system architectures and their applications.