A Neural Conversational Model

Conversational modeling is an essential task in natural language processing and machine intelligence. "A Neural Conversational Model" by Oriol Vinyals and Quoc V. Le presents an approach that uses the sequence-to-sequence (seq2seq) framework to predict the next sentence given the previous sentence or sentences in a conversation. Unlike traditional models, the authors' method relies on few hand-crafted rules and little feature engineering, which makes it simple and general.

Technical Approach

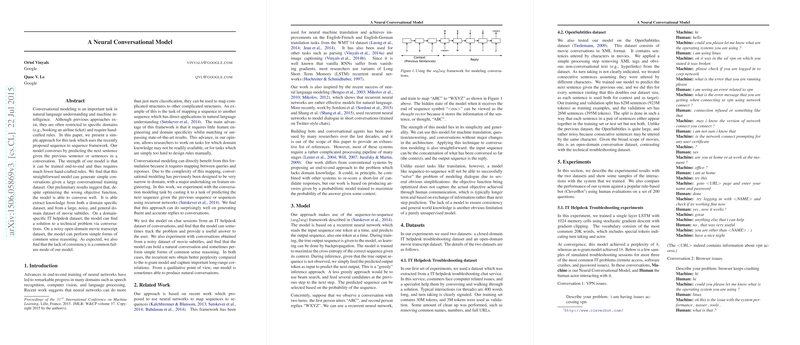

The approach uses a recurrent neural network (RNN), specifically a Long Short-Term Memory (LSTM) network, to map an input word sequence to a response. The seq2seq framework reads the input sequence one token at a time and predicts the output sequence one token at a time. The hidden state of the network when it receives the end-of-sequence symbol can be viewed as a "thought vector" that encodes the semantic content of the input. The model is trained to maximize the log-likelihood of the correct response given its context, which is equivalent to minimizing the cross-entropy. During inference, responses are generated greedily, feeding each predicted token back in as the next input, or with beam search, a less greedy alternative that keeps several candidate sequences at each step.
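The difference between greedy decoding and beam search can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the lookup table `TOY_TABLE` stands in for a trained LSTM decoder, and the names `next_token_probs`, `greedy_decode`, and `beam_search` are hypothetical, not taken from the paper. Scores are unnormalized sums of log-probabilities.

```python
import math

EOS = "<eos>"

# Hypothetical stand-in for a trained decoder: maps the tokens generated
# so far to a next-token probability distribution.
TOY_TABLE = {
    (): {"i": 0.6, "yes": 0.4},
    ("i",): {"think": 0.55, "am": 0.45},
    ("i", "think"): {EOS: 1.0},
    ("i", "am"): {EOS: 1.0},
    ("yes",): {EOS: 1.0},
}


def next_token_probs(prefix):
    """Return the toy next-token distribution for a generated prefix."""
    return TOY_TABLE[tuple(prefix)]


def greedy_decode(step_fn, max_len=10):
    """Pick the single most probable token at every step."""
    tokens, log_prob = [], 0.0
    for _ in range(max_len):
        probs = step_fn(tokens)
        token = max(probs, key=probs.get)
        log_prob += math.log(probs[token])
        if token == EOS:
            break
        tokens.append(token)
    return tokens, log_prob


def beam_search(step_fn, beam_width=2, max_len=10):
    """Keep the beam_width best partial sequences at every step."""
    beams = [([], 0.0)]
    finished = []
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            for token, p in step_fn(tokens).items():
                new_score = score + math.log(p)
                if token == EOS:
                    finished.append((tokens, new_score))
                else:
                    candidates.append((tokens + [token], new_score))
        if not candidates:
            break
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    # Prefer completed sequences; fall back to partial ones if none finished.
    return max(finished or beams, key=lambda c: c[1])


greedy_tokens, greedy_lp = greedy_decode(next_token_probs)
beam_tokens, beam_lp = beam_search(next_token_probs, beam_width=2)
```

In this toy table, greedy decoding commits to the locally best first token and ends with a sequence of total probability 0.33, while beam search with width 2 finds a sequence of probability 0.4. Real decoders often also normalize scores by length, which this sketch omits.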

Datasets

Two datasets were used in the experiments: an IT helpdesk troubleshooting dataset and the OpenSubtitles movie transcript dataset. The IT helpdesk dataset is a closed-domain collection with 30M tokens for training and 3M for validation. The OpenSubtitles dataset is open-domain and much larger, with 923M tokens for training and 395M tokens for validation.

Experimental Results

IT Helpdesk Dataset

For the IT helpdesk dataset, a single-layer LSTM with 1024 memory cells was trained. The model achieved a perplexity of 8, compared with 18 for an n-gram model. In simulated conversations, the trained model gave practical, contextually relevant troubleshooting help. For instance, when a user described problems accessing the VPN, the model carried out a multi-turn diagnostic dialogue: it asked for operating system details, suggested checking a URL, and offered specific commands, ultimately resolving the problem.
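Perplexity is the exponential of the average negative log-likelihood per token, so a perplexity of 8 means the model is, on average, as uncertain as if it were choosing uniformly among 8 tokens at each step. The following is a minimal sketch of that computation; the function name `perplexity` and the input probabilities are illustrative assumptions, not data from the paper.

```python
import math


def perplexity(token_probs):
    """Perplexity from the probabilities a model assigned to each correct token."""
    if not token_probs:
        raise ValueError("token_probs must contain at least one probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# Illustrative input: a model that gives every correct token probability 1/8.
uniform_ppl = perplexity([1 / 8] * 5)
```

Lower is better: a model that assigned probability 1 to every correct token would reach the minimum perplexity of 1.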

OpenSubtitles Dataset

The OpenSubtitles dataset, which is both large and noisy, was modeled with a two-layer LSTM in which each layer has 4096 memory cells. This model achieved a perplexity of 17, compared with 28 for a smoothed 5-gram model. Despite the noise in the data, the model generalized well and produced plausible answers. Sample conversations covered a range of topics, from general knowledge and philosophy to morality and personal opinions. The model could retain context and answer varied questions sensibly, although it was sometimes inconsistent, for example when defining moral actions or when asked questions that probe for a coherent personality.

Human Evaluation

In a comparative evaluation against CleverBot on 200 questions, rated by four human judges, the Neural Conversational Model (NCM) was preferred for 97 questions and CleverBot for 60, with 20 ties and 23 questions on which the judges disagreed. This suggests that the NCM, despite some limitations, more often produced satisfactory and contextually appropriate responses.
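As a quick arithmetic check on these figures, the sketch below confirms that the four outcome counts add up to the 200 questions and converts them to percentages. The dictionary labels and the helper name `shares` are illustrative assumptions; only the counts come from the text above.

```python
# Outcome counts as reported in the evaluation above.
counts = {
    "NCM preferred": 97,
    "CleverBot preferred": 60,
    "tie": 20,
    "disagreement": 23,
}


def shares(tally):
    """Return the total and each outcome's share of it, in percent."""
    total = sum(tally.values())
    return total, {label: 100.0 * n / total for label, n in tally.items()}


total, pct = shares(counts)
for label, value in pct.items():
    print(f"{label}: {value:.1f}%")
```

The NCM was preferred on just under half of all questions (48.5%), against 30% for CleverBot.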

Discussion and Implications

The model's ability to generate coherent, contextually relevant dialogue without explicit rules or feature engineering highlights the potential of data-driven approaches in conversational AI. However, the authors acknowledge limitations, particularly in establishing a consistent personality and maintaining context over long interactions. Future work should address these challenges, potentially through objective functions that align more closely with the goals of human conversation and through memory networks or attention mechanisms that improve coherence and context retention.

Furthermore, despite promising preliminary results, scaling this approach to support diverse conversational domains and more complex forms of dialogue remains a significant challenge. Enhancements in training data quality, model architecture, and objective functions are critical next steps. Additionally, defining robust metrics beyond perplexity, such as user satisfaction or task completion rates, will be crucial for practical deployment in real-world applications.

In summary, this paper presents an innovative attempt to develop end-to-end neural conversational models. Its findings suggest significant potential for such models, though substantial advancements are required to achieve truly human-like conversational agents.