Analysis of "LCSTS: A Large Scale Chinese Short Text Summarization Dataset"

The paper under review presents "LCSTS," a comprehensive dataset aimed at addressing the scarcity of large-scale resources for Chinese short text summarization. Authored by Baotian Hu, Qingcai Chen, and Fangze Zhu, the work focuses on leveraging data from Sina Weibo, a prominent Chinese microblogging platform, to build a dataset intended for training and evaluating natural language processing models.

Core Contributions

The authors describe several key contributions:

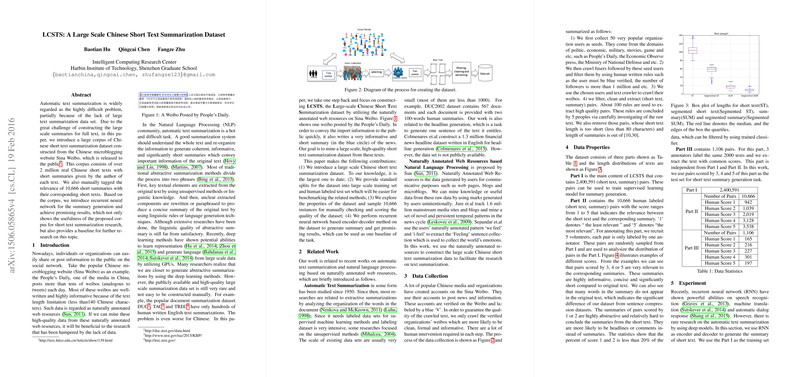

- Dataset Construction: The LCSTS dataset contains over 2.4 million pairs of Chinese short texts and their corresponding summaries, sourced from Sina Weibo. The authors present it as the largest dataset of its kind, a substantial increase in the data available for Chinese short text summarization. The dataset is divided into a large training portion and smaller human-annotated portions used for evaluation, including 10,666 pairs whose summaries were manually scored for relevance to the source text.

- Benchmarking: The paper provides benchmarks using recurrent neural networks (RNNs), establishing a baseline for other researchers in the field. Two RNN configurations are tested, with and without the inclusion of context during the decoding phase.

- Evaluation of Summarization Models: By employing RNN-based encoder-decoder architectures, the authors have set a precedent in using deep learning for Chinese summarization tasks. Their character-based model outperformed word-based models, suggesting character-level processing may be more effective for Chinese text due to the issue of out-of-vocabulary words in a word-based approach.
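The out-of-vocabulary argument for character-level input can be illustrated with a small sketch. The toy sentences, the hand-made word segmentation, and the helper names `build_vocab` and `oov_rate` are assumptions for illustration only; they are not taken from the paper or its code.

```python
from collections import Counter

def build_vocab(token_lists, max_size):
    """Keep the max_size most frequent tokens seen in the training data."""
    counts = Counter(tok for toks in token_lists for tok in toks)
    return {tok for tok, _ in counts.most_common(max_size)}

def oov_rate(tokens, vocab):
    """Fraction of tokens that are missing from the vocabulary."""
    if not tokens:
        return 0.0
    return sum(tok not in vocab for tok in tokens) / len(tokens)

# Hypothetical, hand-segmented training data (word level).
train_words = [["北京", "大学"], ["上海", "交通"]]
# The same data split into single characters (character level).
train_chars = [list("".join(words)) for words in train_words]

# Unseen abbreviations built entirely from characters seen in training.
test_words = ["北大", "上交"]
test_chars = list("".join(test_words))

word_vocab = build_vocab(train_words, max_size=10)
char_vocab = build_vocab(train_chars, max_size=10)

word_oov = oov_rate(test_words, word_vocab)  # every word is unseen
char_oov = oov_rate(test_chars, char_vocab)  # every character is known
print(word_oov, char_oov)
```

In this toy case the word-level vocabulary misses both test words while the character-level vocabulary covers every test character, which is the kind of effect the bullet above attributes to word-based models.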

Experimental Outcomes

The RNN models perform best when context is included during decoding. The character-level RNN model with context achieved ROUGE-1, ROUGE-2, and ROUGE-L scores of 0.299, 0.174, and 0.272, respectively. These results suggest that, given enough training data, neural encoder-decoder models can capture the main content of a short text and generate a coherent summary.
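For readers unfamiliar with the metrics, the following is a simplified sketch of recall-oriented ROUGE-N and ROUGE-L computed over characters. It is not the official ROUGE toolkit, and the exact evaluation settings behind the reported scores are not reproduced here; the function names and the toy strings are illustrative assumptions.

```python
from collections import Counter

def ngrams(tokens, n):
    """Count all contiguous n-grams in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def rouge_n_recall(candidate, reference, n):
    """Clipped n-gram overlap divided by the number of reference n-grams."""
    ref = ngrams(reference, n)
    cand = ngrams(candidate, n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    overlap = sum(min(count, cand[gram]) for gram, count in ref.items())
    return overlap / total

def lcs_length(a, b):
    """Length of the longest common subsequence, by dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, 1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]

def rouge_l_recall(candidate, reference):
    """LCS length divided by the reference length."""
    if not reference:
        return 0.0
    return lcs_length(candidate, reference) / len(reference)

# Toy example: the candidate drops one character from the reference.
reference = list("北京发布报告")
candidate = list("北京发报告")

r1 = rouge_n_recall(candidate, reference, 1)  # 5 of 6 unigrams matched
r2 = rouge_n_recall(candidate, reference, 2)  # 3 of 5 bigrams matched
rl = rouge_l_recall(candidate, reference)     # LCS of length 5 over 6
print(round(r1, 3), round(r2, 3), round(rl, 3))
```

Scoring on characters rather than words avoids any dependence on a word segmenter, which is one reason character-level evaluation is a natural choice for Chinese.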

Data and Methodology Analysis

Collecting data from verified Sina Weibo accounts is intended to improve the quality and informativeness of the content, a significant consideration given how noisy user-generated content tends to be. The criteria for selecting seed users and the subsequent filtering steps are designed to keep the resulting dataset clean, although content from verified accounts may not be fully representative of microblogging text in general.

Notably, the authors address challenges inherent in sequence-to-sequence modeling for abstractive summarization, such as handling rare words and generating contextually appropriate summaries. The manual scoring process and dataset partitioning into training and test sets offer structured benchmarks for future comparative studies.
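One way manual scores can be turned into an evaluation set is sketched below. The `Pair` record, its field names, the integer score scale, and the threshold passed to `select_eval_pairs` are all assumptions made for illustration; they are not a description of the authors' released format or tooling.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Pair:
    text: str                    # source short text
    summary: str                 # associated summary
    score: Optional[int] = None  # human relevance score; None if unscored

def select_eval_pairs(pairs: List[Pair], min_score: int) -> List[Pair]:
    """Keep only manually scored pairs whose score meets the threshold."""
    return [p for p in pairs if p.score is not None and p.score >= min_score]

# Hypothetical examples; scores are assumed to be integers, higher is better.
pairs = [
    Pair("text a", "summary a", score=1),
    Pair("text b", "summary b", score=3),
    Pair("text c", "summary c", score=5),
    Pair("text d", "summary d"),  # unscored, so never used for evaluation
]

eval_pairs = select_eval_pairs(pairs, min_score=3)
print([p.text for p in eval_pairs])
```

Filtering by score keeps weakly related text and summary pairs out of the evaluation data, so reported metrics reflect pairs that human annotators judged to be acceptable.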

Implications and Future Directions

This research has clear implications for NLP, particularly for Chinese language processing. The creation of LCSTS lowers the barrier to entry for researchers aiming to improve summarization of Chinese short texts. By making a large-scale dataset available, the work enables further investigation of data-hungry neural architectures for this task.

Potential future developments include hierarchical models, such as hierarchical RNNs, that better reflect the structure of short texts, as well as better handling of rare words. Other deep learning architectures, such as Transformers, could yield further improvements in model performance. Additionally, extending this work to document-level summarization could open new avenues for tackling the challenges posed by longer texts.

Overall, the LCSTS paper is notable for its focus on data scale and methodological rigor, setting a standard for subsequent research in automatic text summarization, particularly for languages such as Chinese that are written without explicit word boundaries.