Two-Stream Convolutional Networks for Action Recognition in Videos

In the paper "Two-Stream Convolutional Networks for Action Recognition in Videos," Karen Simonyan and Andrew Zisserman propose a novel architecture designed to improve action recognition in video sequences. This research addresses the inherent complexity of video data, specifically the challenge of incorporating both spatial and temporal information.

Contributions

The primary contributions of this paper are three-fold:

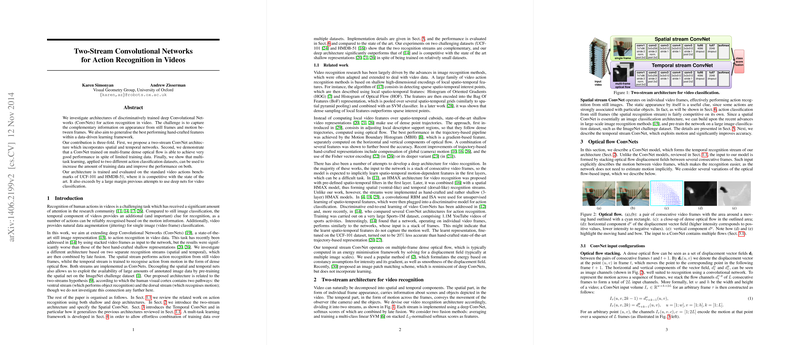

- Two-Stream ConvNet Architecture: The authors introduce a bi-stream network comprising spatial and temporal ConvNets. The spatial stream operates on individual video frames, leveraging the information contained in static images, while the temporal stream processes dense optical flow derived from multiple consecutive frames to capture motion dynamics.

- Training on Optical Flow: It is demonstrated that ConvNets trained on multi-frame dense optical flow can achieve high performance even with limited video training data.

- Multi-Task Learning: By employing a multi-task learning approach, trained on multiple action classification datasets (UCF-101 and HMDB-51), the paper illustrates how this method can effectively increase training data volume and overall performance.

Methodology

The architecture builds upon previous work but innovatively decouples the spatial and temporal information processing. This is a significant departure from earlier methods by Karpathy et al., which only stacked video frames for learning. The spatial ConvNet leverages pre-training on large image datasets like ImageNet and fine-tunes on video action datasets. The temporal ConvNet, on the other hand, is uniquely trained on sequences of dense optical flows, allowing it to capture motion correlations more effectively than using raw frames alone. The final classification is achieved by fusing softmax scores from both streams, using either simple averaging or a more sophisticated SVM classifier.

Numerical Results

The proposed model was evaluated on standard benchmarks (UCF-101 and HMDB-51). On UCF-101, the spatial ConvNet alone achieved 72.7% accuracy, while the temporal ConvNet reached 81.0%. When combined via score fusion, the architecture yielded 87.0% accuracy. On HMDB-51, the two-stream model attained 59.4% accuracy after exploiting multi-task learning. These results are competitive with, and in several aspects surpass, the state-of-the-art at the time, demonstrating the efficacy of the two-stream approach for action recognition in videos.

Theoretical and Practical Implications

The two-stream architecture offers multiple theoretical and practical implications:

- Theoretical Implications:

- The architecture suggests a clear segmentation of visual processing, reminiscent of the human visual cortex model (with ventral and dorsal pathways).

- It prompts further inquiry into specialized network designs that handle spatial and temporal data separately.

- Practical Implications:

- This model can be directly applied to various domains such as surveillance, sports analysis, and human-computer interaction, where understanding human actions in video is crucial.

- The use of pre-training on large image datasets combined with fine-tuning on task-specific video data suggests a productive pathway for developing robust video action recognition systems even with limited video-specific training data.

Future Developments

Looking ahead, the model points towards several promising avenues for future research:

- Large-Scale Training Data: Scaling the architecture to very large datasets (e.g., Sports-1M) could further enhance performance. This, however, entails significant computational challenges.

- Combining Features: Integrating trajectory-based pooling or explicitly handling camera motion could address the limitations remaining in current shallow representations.

- Extended Architectures: Exploring fusions between this approach and end-to-end spatio-temporal learning models may yield architectures capable of surpassing the limitations of each individual method.

Conclusion

Simonyan and Zisserman's two-stream convolutional network architecture for action recognition in videos contributes significantly to the field by effectively disentangling and leveraging both spatial and temporal information. This model not only advances theoretical understanding but also offers practical benefits and guides future research directions in video analysis using deep learning.