An In-Depth Examination of the Bandits with Knapsacks (BwK) Model

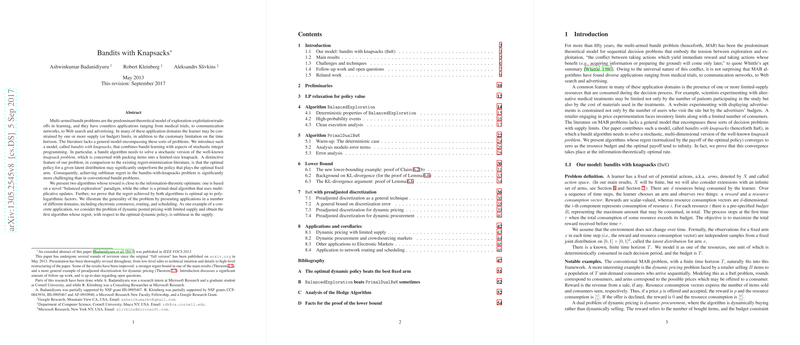

The paper "Bandits with Knapsacks" addresses a gap in the multi-armed bandit (MAB) literature by adding budget constraints to the exploration-exploitation framework. The resulting model, Bandits with Knapsacks (BwK), extends the classical MAB problem with elements of stochastic integer programming, so that it can handle limited-supply resources in addition to the standard time-horizon constraint.

Problem Formulation and Model

The BwK model centers on a decision-maker (learner) with a finite set of actions (arms). Each arm, when played, yields a random reward and consumes random amounts of one or more resources, each of which has its own budget. The process ends when the time horizon is reached or a budget is exhausted, and the learner's objective is to maximize the total expected reward collected before that point. The model's main appeal is that it covers many applications with resource constraints, such as dynamic pricing, ad allocation, and electronic commerce.
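The interaction protocol described above can be sketched as a small simulator. This is a minimal illustration under stated assumptions, not code from the paper: the class name `BwKEnvironment`, the Bernoulli rewards and consumptions, and the uniform-random baseline are all hypothetical choices made for the example.

```python
import random


class BwKEnvironment:
    """Toy stochastic BwK instance (hypothetical, for illustration only)."""

    def __init__(self, reward_means, consumption_means, budgets, horizon, seed=0):
        self.reward_means = reward_means            # mean Bernoulli reward per arm
        self.consumption_means = consumption_means  # [arm][resource] mean Bernoulli usage
        self.remaining = list(budgets)              # supply left for each resource
        self.horizon = horizon                      # maximum number of rounds
        self.rounds_played = 0
        self.rng = random.Random(seed)

    def done(self):
        # Stop at the horizon, or once some resource cannot cover one more unit.
        return self.rounds_played >= self.horizon or min(self.remaining) < 1

    def play(self, arm):
        """Play one arm and return (reward, consumption vector)."""
        if self.done():
            raise RuntimeError("budget or horizon exhausted")
        reward = int(self.rng.random() < self.reward_means[arm])
        used = [int(self.rng.random() < c) for c in self.consumption_means[arm]]
        self.remaining = [b - u for b, u in zip(self.remaining, used)]
        self.rounds_played += 1
        return reward, used


def run_uniform_policy(env):
    """Baseline learner: pick arms uniformly at random until the process stops."""
    total = 0
    while not env.done():
        arm = env.rng.randrange(len(env.reward_means))
        reward, _ = env.play(arm)
        total += reward
    return total


# Two arms, two resources, budget 10 each, at most 100 rounds.
env = BwKEnvironment([0.5, 0.8], [[0.2, 0.0], [0.9, 0.5]], [10, 10], 100)
print(run_uniform_policy(env), env.rounds_played, env.remaining)
```

Because each round consumes at most one unit of each resource in this toy setup, stopping when a resource has less than one unit left guarantees that no budget is ever overspent.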

The formulation makes BwK harder than traditional bandit problems, because the learner must track several resource constraints alongside the rewards. In particular, the optimal policy can significantly outperform the best fixed arm: mixing arms that draw on different resources can use the budgets more fully than any single arm. Regret is therefore measured against a stronger benchmark, which makes sublinear regret harder to achieve.
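A small deterministic instance makes the gap between a fixed arm and a mixture concrete. The setup below is a hypothetical example chosen for illustration: two arms, two resources with budget `B` each, where arm `i` earns reward 1 and uses one unit of resource `i` only.

```python
def total_reward(policy, budgets, horizon):
    """Run the two-arm instance: arm i earns 1 and uses one unit of resource i."""
    remaining = list(budgets)
    reward = 0
    for t in range(horizon):
        arm = policy(t)
        if remaining[arm] < 1:
            break  # playing this arm would exceed its budget, so the process stops
        remaining[arm] -= 1
        reward += 1
    return reward


B = 50
T = 2 * B
fixed_arm = total_reward(lambda t: 0, [B, B], T)        # always play arm 0
alternating = total_reward(lambda t: t % 2, [B, B], T)  # alternate between arms
print(fixed_arm, alternating)  # prints: 50 100
```

Either fixed arm runs out of its own resource after `B` rounds, while alternating spends both budgets and collects twice the reward.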

Algorithmic Contributions

The paper introduces two algorithms whose expected reward is close to that of the optimal policy. The regret of each is optimal up to polylogarithmic factors:

- Balanced Exploration: This algorithm introduces a "balanced exploration" paradigm built on confidence bounds. It repeatedly updates the set of distributions over arms that could still be optimal and explores as evenly as possible among arms that are not evidently suboptimal.

- Primal-Dual BwK: This algorithm uses a primal-dual technique. It keeps one dual variable per resource, interpreted as the estimated cost of that resource, and adjusts these variables iteratively with multiplicative updates. The estimated costs guide the choice of the most "cost-effective" arm in each round. The approach adapts multiplicative weights methods, which are typically used in other contexts, by applying the updates in the dual space, to the resources rather than to the arms.
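The primal-dual idea can be sketched in a few lines. This is a simplified illustration, not the paper's exact algorithm: the function name `primal_dual_bwk`, the `pull` callback, the Hoeffding-style confidence radius, the shared budget for all resources, and the step size are assumptions made for the example.

```python
import math


def primal_dual_bwk(pull, n_arms, n_resources, budget, horizon):
    """Simplified sketch: optimistic rewards, optimistic costs, multiplicative prices.

    `pull(arm)` is a hypothetical callback returning (reward, consumption list),
    with all values in [0, 1]. Every resource is assumed to share one budget.
    """
    # Assumed step size; max(..., 2) keeps it positive with a single resource.
    eps = math.sqrt(math.log(max(n_resources, 2)) / budget)
    weights = [1.0] * n_resources  # unnormalized dual variables, one per resource
    plays = [0] * n_arms
    reward_sum = [0.0] * n_arms
    cost_sum = [[0.0] * n_resources for _ in range(n_arms)]
    spent = [0.0] * n_resources
    total_reward = 0.0

    for t in range(horizon):
        if max(spent) > budget - 1:
            break  # one more pull could overspend some resource
        if t < n_arms:
            arm = t  # initialization: try every arm once
        else:
            norm = sum(weights)
            prices = [w / norm for w in weights]  # normalized resource prices
            best_ratio, arm = -1.0, 0
            for a in range(n_arms):
                radius = math.sqrt(2.0 * math.log(horizon) / plays[a])
                ucb_reward = min(1.0, reward_sum[a] / plays[a] + radius)
                lcb_costs = [max(0.0, c / plays[a] - radius) for c in cost_sum[a]]
                priced_cost = sum(p * c for p, c in zip(prices, lcb_costs))
                ratio = ucb_reward / max(priced_cost, 1e-9)  # reward per unit of priced cost
                if ratio > best_ratio:
                    best_ratio, arm = ratio, a
        reward, used = pull(arm)
        plays[arm] += 1
        reward_sum[arm] += reward
        total_reward += reward
        for i, u in enumerate(used):
            cost_sum[arm][i] += u
            spent[i] += u
            weights[i] *= (1.0 + eps) ** u  # consumed resources become more expensive
    return total_reward, spent
```

A resource that is being used up quickly accumulates a larger weight, so arms that rely on it look less cost-effective in later rounds. This is how the dual variables steer the arm choice toward a balanced use of the budgets.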

Analytical Insights and Lower Bound Implications

The paper establishes regret bounds for the proposed algorithms relative to the expected reward of the optimal policy. The regret is sublinear in the budgets and in the optimal policy's value, so it becomes small relative to the optimal reward as budgets and horizon grow. The paper also provides matching lower bounds, showing that these regret bounds are optimal up to polylogarithmic factors. The lower bound proof uses a carefully constructed example to demonstrate limitations that apply to any BwK algorithm.
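For orientation, the upper bound is usually summarized in the following form. The notation is assumed for this summary and should be checked against the paper's theorem statements: $m$ is the number of arms, $B$ the smallest budget, $\mathrm{OPT}$ the expected reward of the optimal policy, $\mathrm{REW}$ the algorithm's total reward, and $\tilde{O}$ hides polylogarithmic factors.

$$
\mathrm{OPT} - \mathbb{E}[\mathrm{REW}] \;\le\; \tilde{O}\!\left(\sqrt{m\,\mathrm{OPT}} \;+\; \mathrm{OPT}\,\sqrt{m/B}\right)
$$

The first term resembles the square-root regret familiar from classical bandits. The second term is small relative to $\mathrm{OPT}$ only when $B$ is large compared with $m$, which is one way to read the claim that performance improves as resources increase.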

Applications, Generalizations, and Future Directions

The paper illustrates the generality of the BwK model with applications in dynamic pricing, inventory management, ad allocation, and procurement. It also explores generalizations to settings with contextual information (contextual bandits) and to adaptive pricing scenarios, connecting the model to work in operations research, machine learning, and economics.

The results on discretization highlight the difficulty of handling continuous action spaces, a recurring issue in practical applications: the learner must restrict attention to a finite set of actions without giving up too much reward. Techniques such as preadjusted discretization have been extended to infinite action spaces that arise in dynamic pricing and in procurement with variable supply limits.
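The trade-off behind discretization can be seen with a plain uniform price mesh. The sketch below is not the preadjusted discretization technique and it ignores supply limits. It only illustrates, for a hypothetical demand curve `demand`, how a coarser mesh loses revenue while a finer one adds arms to explore.

```python
def price_grid(step):
    """Uniform mesh of candidate prices in (0, 1]; each price becomes one arm."""
    n = round(1.0 / step)
    return [k * step for k in range(1, n + 1)]


def expected_revenue_per_round(price, sale_probability):
    # Expected per-round reward of a posted price: price times chance of a sale.
    return price * sale_probability(price)


def demand(price):
    # Hypothetical demand curve: the sale probability drops as the price rises.
    return (1.0 - price) ** 2


def best_grid_revenue(step, sale_probability):
    """Best expected per-round revenue achievable with prices on the mesh."""
    return max(expected_revenue_per_round(p, sale_probability) for p in price_grid(step))


for step in (0.5, 0.25, 0.1):
    arms = price_grid(step)
    print(step, len(arms), round(best_grid_revenue(step, demand), 4))
```

For this demand curve the continuous optimum is a price of 1/3 with expected revenue 4/27 per round. Finer meshes get closer to that value, at the cost of more arms for the learner to explore.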

Conclusion

The paper contributes on both the theoretical and the practical side. The BwK framework broadens the applicability of bandit models to resource-constrained environments, and the two algorithms offer new and efficient ways to address the resulting problems. Future research can explore BwK in more nuanced settings, including adversarial environments and more intricate budget dynamics.